The actual and promised benefits of AI for police forces’ operational capabilities rarely come without a cost. Scholars, practitioners, civil society organizations and policymakers are raising numerous concerns about the negative effects that law enforcement AI may have on individuals and society. For instance, AI systems can be biased and reinforce discrimination; the reasoning behind an AI output can be unexplainable and hard for defendants to challenge in court; and a generalized, untargeted use of AI systems can lead to mass surveillance and deter individuals from exercising their rights and freedoms.

To guide police and law enforcement agencies and minimize the risks their use of AI may pose, two instruments are often invoked: ethics and law. Let’s take a closer look at them.

Ethics

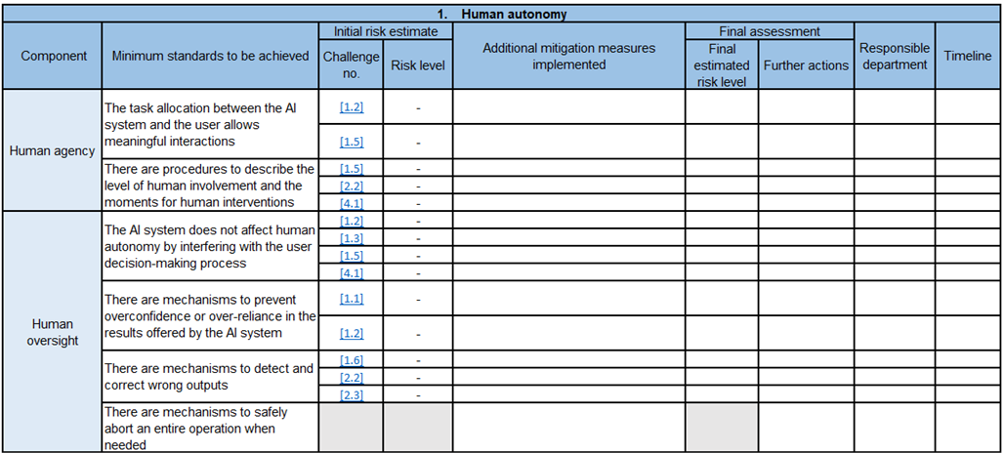

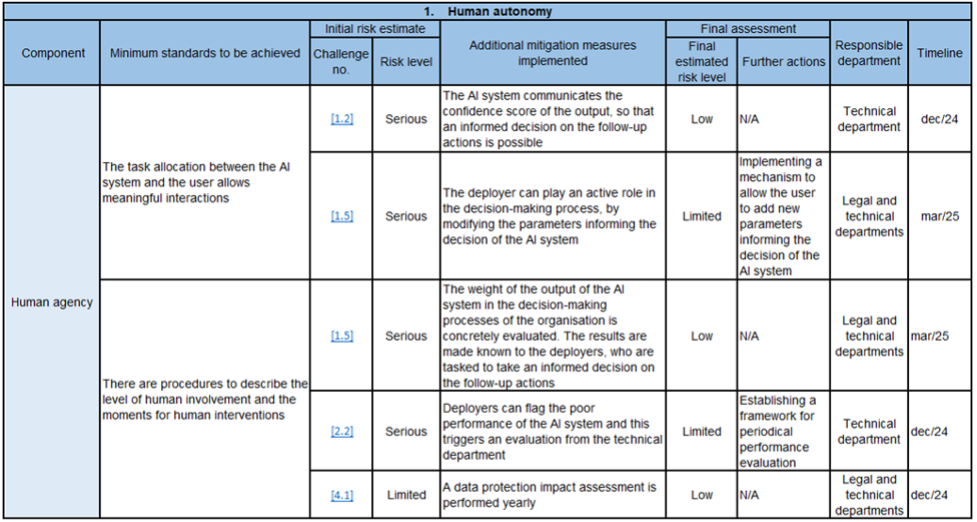

Starting with ethics: in 2019, the independent High-Level Expert Group on AI, set up by the European Commission, published its Ethics Guidelines for Trustworthy AI. There, the experts translated the broad principles of AI ethics into seven concrete requirements that all AI developers and users, including law enforcement agencies, should always meet. The requirements are: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability.

Law

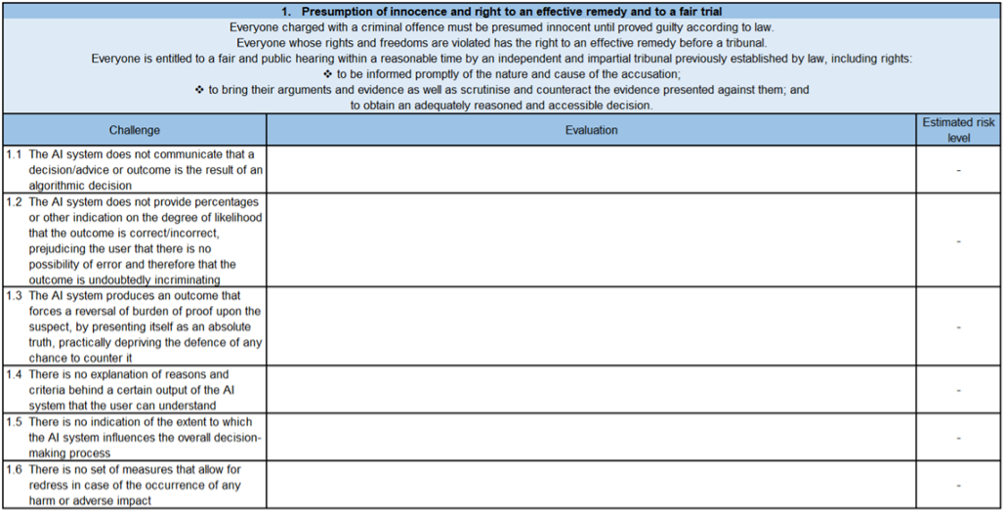

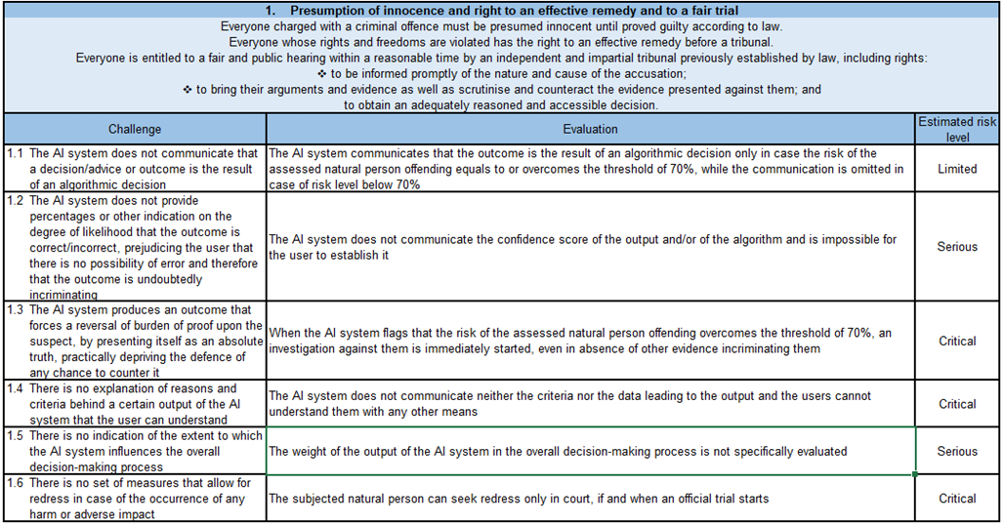

As for the law, the fundamental rights of individuals enshrined in the EU Charter should, first of all, always inform the development and deployment of all AI systems in the EU. In the context of law enforcement AI, the following fundamental rights are of paramount importance: the presumption of innocence and the right to an effective remedy and to a fair trial; the right to equality and non-discrimination; freedom of expression and information; and the right to respect for private and family life and to the protection of personal data. Moreover, since August 2024, the AI Act has horizontally regulated AI systems placed on the market or put into service in the EU. However, while the AI Act establishes extensive obligations for AI providers, it devotes more limited attention to AI deployers, namely those natural or legal persons using AI systems in the course of professional activities. As a result, the AI Act largely entrusts the task of ensuring an ethical and lawful deployment of law enforcement AI to a single instrument, the fundamental rights impact assessment. Pursuant to Article 27 of the AI Act, deployers of high-risk AI systems that are also bodies governed by public law, such as police and law enforcement agencies, are obliged to assess the impact that the use of such AI systems may have on fundamental rights.

Undoubtedly, obliging police and law enforcement agencies to perform a fundamental rights impact assessment of the high-risk AI systems they plan to deploy is a first step toward mitigating the risks posed by law enforcement AI. However, it is a step that is difficult to translate into practice: to comply with Article 27 of the AI Act, law enforcement agencies need an operational instrument that they can integrate into their AI governance procedures.