What are the big social questions we should be asking about AI? Bram Allegaert (BrainBugs) asks himself this often, and he still does not have a complete answer. At BrainBugs, Bram develops serious games for social innovation, and he has been a member of the learning network 'AI and ethics in practice' since its launch. We spoke to him briefly about his experiences with the learning network so far.

Hi Bram, what prompted you to join the learning network 'AI and ethics in practice'?

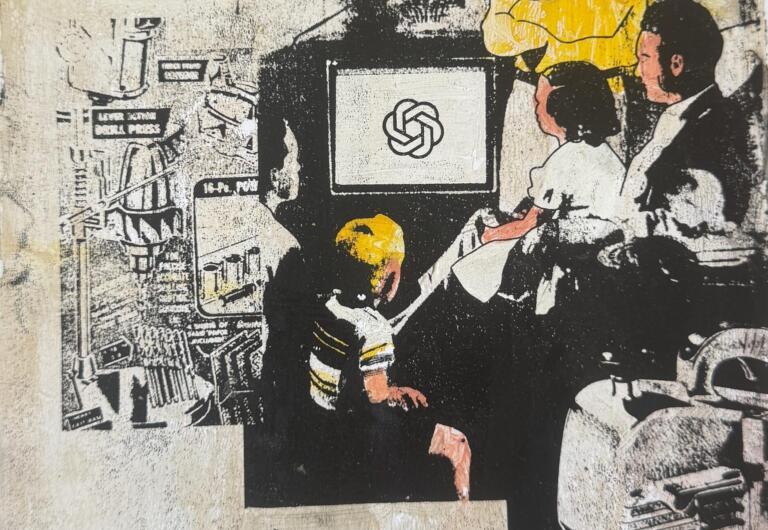

With BrainBugs, our serious game studio, we are currently developing a new game for schoolchildren about AI and the ethical questions it raises. We notice that many schools focus mainly on the technical side (How does it work? What software do we need?), and that the important social questions take second place. We want to change that with our new game on AI and ethics. The learning network 'AI and ethics in practice' is a good place to find inspiration and, possibly, a sounding board for our games.

What is valuable to you about the learning community?

With BrainBugs, we sometimes feel like outsiders within the network, because we are a very small organisation that is mainly interested in specific ethical questions. The topics discussed are often more about ethics at the organisational level. But because the conversations are conducted in an accessible way, I can still follow along, and we have also learnt things that apply to our own organisation and to how we use AI in our projects.

For example, in a very interesting session recently, a member of the network asked what happens to the data you enter as a prompt in ChatGPT or other generative AI tools. That was a good wake-up call for me to be careful with what I put in prompts.