European Commission - Digital Services Act

The Digital Services Act (DSA) defines a new set of rules for governing digital services in the EU. The DSA package regulates online digital platforms, including online marketplaces, social media platforms, search engines, video gaming platforms and other information society services and internet service providers.

It is a horizontal instrument establishing a framework of layered responsibilities targeted at different types of intermediary services. It will impose significant moderation and transparency requirements on many tech companies.

The purpose of the act is to:

- Better protect consumers and their fundamental rights online

- Establish robust transparency and a clear accountability framework for online platforms

- Foster innovation, growth and competitiveness within the single market

What: Legislation

Impact score: 1

For who: Government, companies, sector organisations, citizens

URL: https://eur-lex.europa.eu/lega...

Key takeaways for Flanders

- The DSA may create legal uncertainty and cause fragmentation in the research sector. For more context, see Science Europe

- The DSA will become applicable on January 1, 2024. In our country, no decision has yet been made as to which authority should enforce it. This should be decided soon so that the future regulator can prepare for its new tasks.

- There are still some loose ends in the DSA. A number of questions around enforcement remain open, and the Act refers to delegated acts, implementing acts, potential codes of conduct and voluntary standards, quite a few of which still need to be developed. These include, for instance, how to determine the size of a platform, which additional voluntary rules for online advertising will come into use and which reporting obligations fall on platforms and regulators. An overview can be found here.

COVID-19 has highlighted the extent to which citizens, workers, consumers and businesses depend on digital services and online platforms. There are more than 10,000 platforms in the EU offering these services, and 90% of them are SMEs. Because digital services have become so important, the European Commission wants to use the DSA to regulate how intermediaries such as platforms handle user content. It offers an opportunity for the EU to set the global standard for online content governance.

The DSA upgrades and builds on the e-Commerce directive of 2000. It preserves key ideas and legal structures from that law and should be seen together with the Digital Markets Act. The DMA defines rules to impose on large tech companies to prevent them from engaging in unfair competition practices. Together, they should create a safer online environment and unify various divergent rules across countries.

The DSA mainly focuses on:

- Better protection for consumers and their fundamental rights online

- A clear liability regime and additional obligations (e.g. transparency) related to the spreading of illegal content

- Fostering innovation, growth and competitiveness within the single market

Who is covered?

The DSA applies to providers of intermediary services that conduct business in the EU, regardless of where the business is established, and have a substantial connection to the EU. Even small and micro companies are covered (although the regulations are tailored to size). A substantial connection exists when the provider:

- Has an establishment in the EU; or

- Has a significant number of users in the EU; or

- Targets its activities towards one or more EU member states
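The three criteria above are alternatives, so meeting any one of them is enough. A minimal sketch of that test, assuming a hypothetical `Provider` record whose field names are purely illustrative and not DSA terminology:

```python
from dataclasses import dataclass


@dataclass
class Provider:
    """Hypothetical record; field names are illustrative assumptions."""
    has_eu_establishment: bool
    has_significant_eu_users: bool
    targets_eu_member_state: bool


def has_substantial_connection(p: Provider) -> bool:
    # The criteria are joined by "or": any single one suffices.
    return (
        p.has_eu_establishment
        or p.has_significant_eu_users
        or p.targets_eu_member_state
    )
```

In practice, whether a number of users counts as "significant" or whether activities "target" a member state is a legal assessment; the booleans here simply stand in for its outcome.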

For imposing obligations, the DSA uses a layered approach:

- Providers of intermediary services offering network infrastructure (internet service providers, content distribution networks, web-based messaging services)

- Providers of hosting services (cloud and web hosting services)

- Online platforms and marketplaces (includes app stores, collaborative economy platforms and social media platforms)

- Very large platforms and search engines (platforms reaching more than 10% of 450 million consumers in Europe)
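The threshold for the top layer follows from simple arithmetic: 10% of 450 million is 45 million. The sketch below works this out, assuming the figures quoted in the text; the constant and function names are hypothetical.

```python
EU_CONSUMERS = 450_000_000     # consumer figure quoted in the text
VERY_LARGE_SHARE_PERCENT = 10  # "more than 10%" per the text

# 10% of 450 million, computed with integer arithmetic to avoid rounding.
VERY_LARGE_THRESHOLD = EU_CONSUMERS * VERY_LARGE_SHARE_PERCENT // 100


def is_very_large(eu_users: int) -> bool:
    """True when reach is strictly above the threshold."""
    return eu_users > VERY_LARGE_THRESHOLD
```

How users are actually counted is one of the points the text lists as still to be worked out, so `eu_users` is only a placeholder for whatever figure that method produces.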

New set of obligations

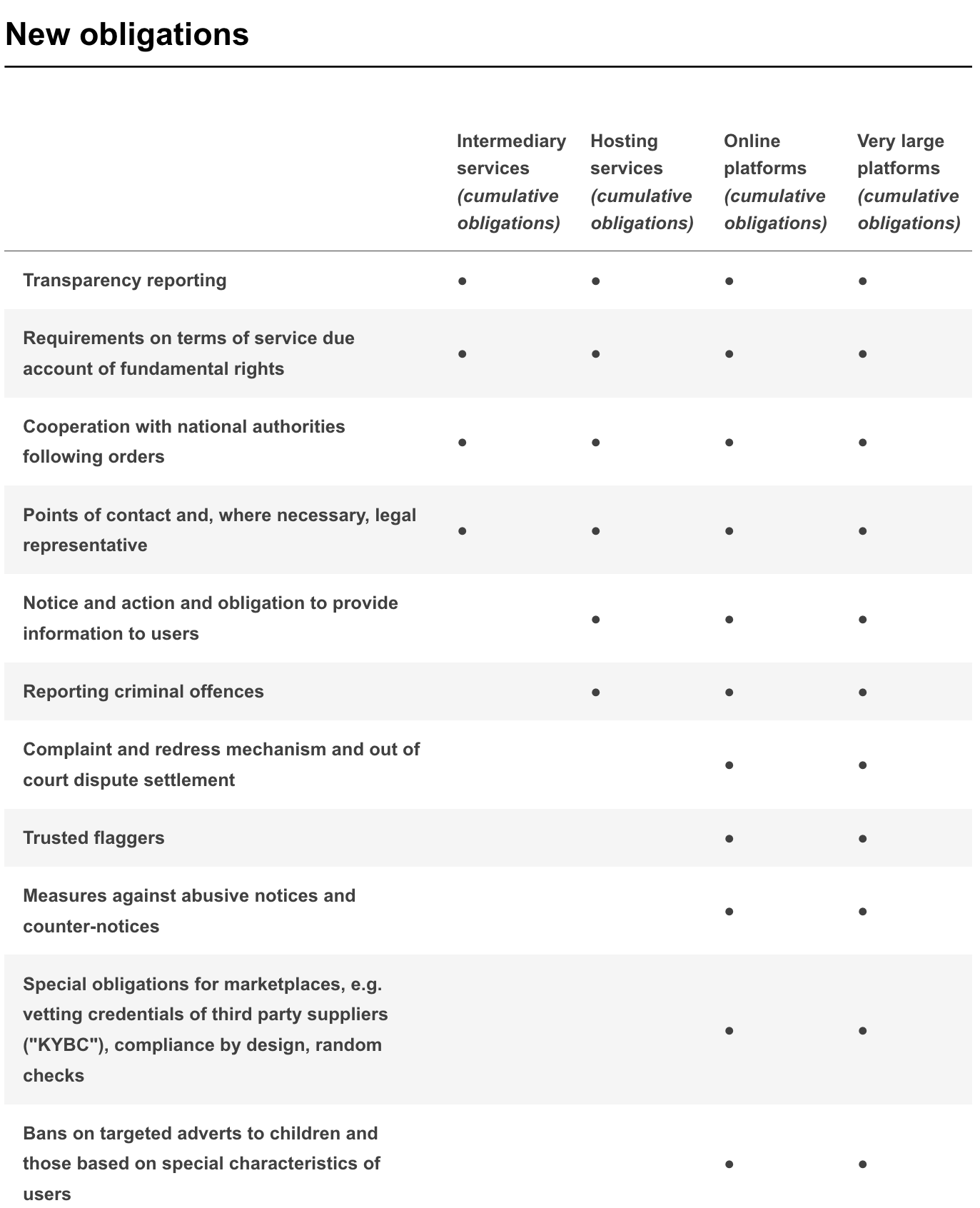

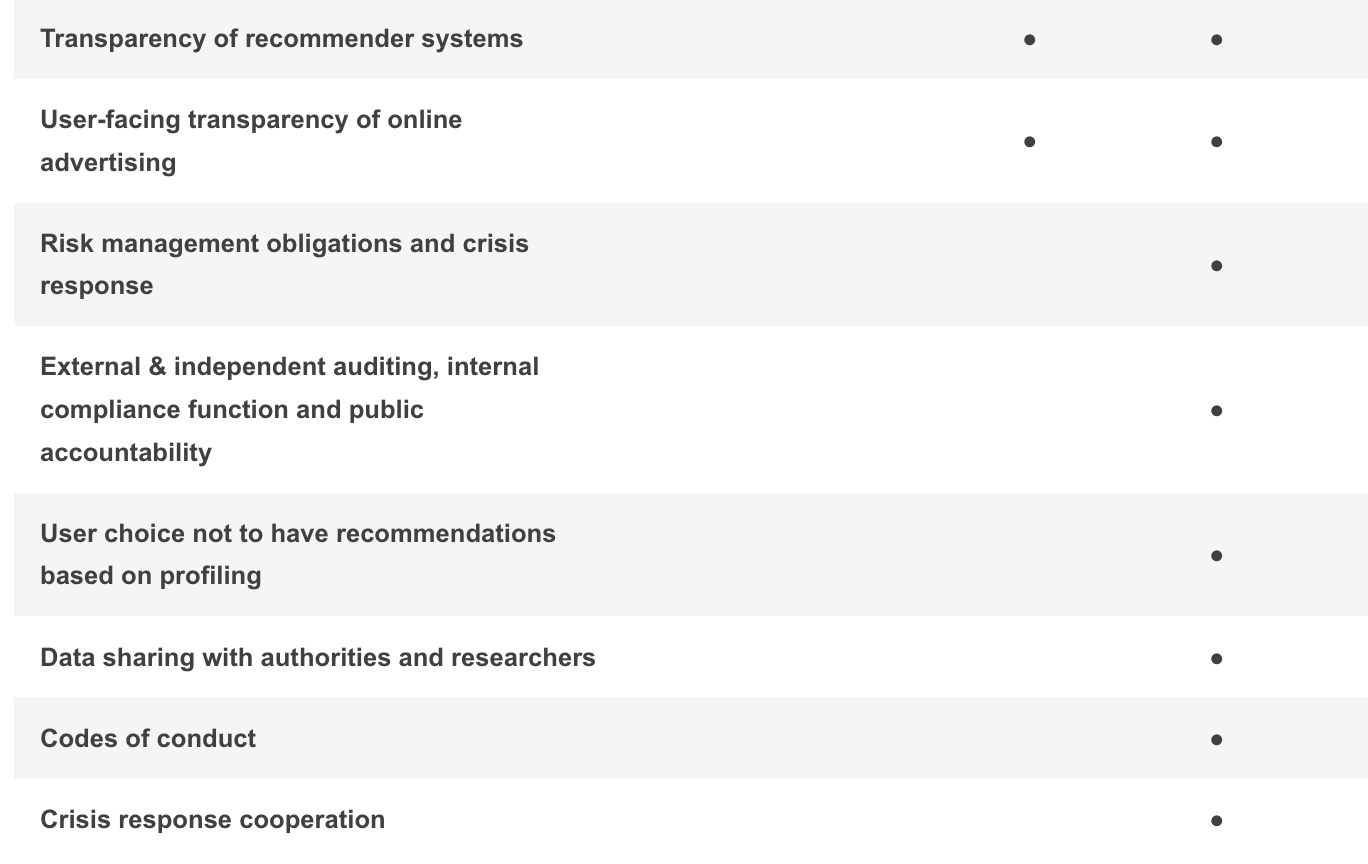

The DSA contains a new set of rules which set out how tech companies should deal with illegal content, by prescribing mandatory procedures and setting transparency requirements. The transparency obligations are adapted to the type and nature of the service concerned. This means that companies such as YouTube and Google will have far more transparency obligations than a simple hosting service. We outline the new rules for each ‘layer’ of providers below. The figure also gives a general overview of the new obligations.

Providers of intermediary services

The obligations include:

- Transparency reporting (publication of an annual report on content moderation efforts). The transparency reports must also describe any automated systems used to moderate content and disclose what their accuracy and possible error rate could be.

- Requirements on terms of service (disclosing any restrictions that providers may impose on the use of their services)

- Cooperation with national authorities

- The introduction of two single points of contact (one to facilitate direct communication with the supervisory authorities and one for the recipients of the services) and a legal representative (for providers not established in the EU)

Providers of hosting services

Hosting services have an important role to play in tackling illegal content online, as they store the information provided by and at the request of the recipients of the service. They must meet the same obligations as intermediary services, as well as obligations such as:

- Putting in place user-friendly notice and action mechanisms that allow third parties to report allegedly illegal content

- Informing users of decisions to remove information or disable access to it

- Reporting criminal offences to law enforcement or judicial authorities

Online platforms and marketplaces

Regulations are more extensive for online platforms and marketplaces, which are required to follow the above obligations and also incorporate:

- The provision of an internal complaint-handling system and out-of-court dispute settlement mechanism for content moderation

- Individuals and organisations that have proven expertise and a track record of applying the rules correctly are given special status. They are labelled trusted flaggers under the DSA. Online content that trusted flaggers flag as violating the rules is treated with priority. National authorities designate these trusted flaggers, who must also comply with certain transparency requirements.

- Measures and protection against misuse (e.g. suspending the provision of the services)

- Online interface design: providers should design their online interfaces in a way that allows users to make a free and informed choice at all times (e.g. by not making the procedure to terminate a service more difficult than registering for it, or by using designs that reduce the risk of disinformation)

- Measures to increase transparency in relation to online advertising (who sponsored the ad, how and why it targets a user, etc.)

- Transparency of recommender systems: set out in the terms and conditions the main parameters used in recommender systems, as well as options to modify or influence those parameters.

- Measures for the online protection of minors

There are special obligations for marketplaces (online platforms allowing consumers to conclude distance contracts with traders):

- Traceability of traders: the implementation of a Know-Your-Customer program

- Compliance by design: providers have to verify if traders provided certain information on the products or services before allowing traders to offer them on the online marketplace

- Right to information: inform consumers about illegal products or services

Very large platforms and search engines

- Very large platforms and search engines (those with more than 45 million users) must comply with all of the above obligations and must also follow additional rules

- Platforms and search engines will have to assess how their products may exacerbate risks to society and take measurable steps to prevent them. They also have to set up risk management mechanisms and crisis response systems

- Providers have to undergo annual external and independent auditing

- Provide at least one recommender system that is not based on profiling

- Set up a public repository on online advertisements that the providers display

- Requirements to appoint qualified compliance officers

- Data sharing with authorities and researchers

- Crisis response cooperation

The requirements the DSA lays on large platforms could provide a much more complete picture in future emergencies that play out online. A crisis mechanism in the law allows the European Commission to order the largest platforms to restrict certain content in response to a security or health emergency. These rules will have serious consequences for a platform such as TikTok, which has so far provided little insight into its internal workings.

Impact on users

What does the DSA mean for users of online services?

- The DSA will create easy and clear ways to report illegal content, goods or services on online platforms

- Users will be informed about, and can contest the removal of content by platforms

- Users will have access to dispute resolution mechanisms in their own country

- Terms and conditions of platforms will be more transparent

- There will be more transparency about the vendors of the products that users buy

- Ban on targeted advertising directed at minors on online platforms

- Ban on targeted advertising that profiles individuals based on “sensitive” traits such as religious affiliation, sexual orientation, health information and political beliefs

- Ban on the use of misleading practices and interfaces (dark patterns): the use of manipulative designs that mislead people into agreeing to something they do not actually want.

- Platforms must provide affected users with detailed explanations if ever they block accounts, or else remove or demote content. Users will have new rights to challenge these decisions with the platforms and seek out-of-court settlements if necessary.

- Providers must give clear information on why content is recommended to users

- Users of online services will have the right to opt out of content recommendations based on profiling

Businesses

- Small players will have legal certainty to develop services and protect users from illegal activities and they will be supported by standards, codes of conduct and guidelines

- Small and micro-enterprises are exempted from the most costly obligations but are free to apply the best practices, for their competitive advantage

- Support to scale-up: exemptions for small enterprises are extended for 12 months after they scale past the turnover and personnel thresholds that qualify them as small companies

- Businesses will be able to use new, simple and effective mechanisms for flagging illegal content and goods that infringe on their rights (including intellectual property rights) or that compete unfairly

- Businesses have the opportunity to become ‘trusted flaggers’ of illegal content or goods, with special priority procedures and tight cooperation with platforms

- Enhanced obligations for marketplaces to apply dissuasive measures, such as "know your business customer" policies, reasonable efforts to perform random checks on products sold on their service, or the adoption of new technologies for product traceability

Enforcement

Enforcement will be coordinated between new national and EU-level bodies. The Commission has direct oversight and enforcement powers over very large platforms and search engines. The largest tech companies will have to publish a detailed report twice a year on their moderation efforts, including the number of employees, their expertise, the languages spoken and the use of AI to remove illegal content. They will also have to report the number of accounts they have suspended and the content they have removed.

Regulators at Member State level (the so-called Digital Services Coordinators) will be responsible for the application and enforcement of the DSA. They will have far-reaching powers to investigate, for example, the operation of notice-and-action mechanisms or the application of algorithms. Fines for failing to comply with the regulation can reach up to 6% of the annual revenue of the infringing business.
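To give a sense of scale, the 6% ceiling can be worked out for any revenue figure. The sketch below is only an illustration of that upper bound, assuming a hypothetical helper `max_fine`; the actual amount of a fine is set by the regulator and may be far lower.

```python
from decimal import Decimal

MAX_FINE_RATE = Decimal("0.06")  # "up to 6%" per the text


def max_fine(annual_revenue: Decimal) -> Decimal:
    """Upper bound on a fine for a given annual revenue (illustrative)."""
    if annual_revenue < 0:
        raise ValueError("annual_revenue must be non-negative")
    return annual_revenue * MAX_FINE_RATE
```

For a business with an annual revenue of 1 billion euros, `max_fine(Decimal("1000000000"))` gives a ceiling of 60 million euros.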

The DSA provides for the appointment of Digital Services Coordinators in each member state and the creation of a European Board for Digital Services. The Commission will also set up a high-profile European Centre for Algorithmic Transparency.

Next steps

On October 4, 2022, the Council of the European Union gave its final approval to the DSA. The regulation will enter into force 20 days after its publication in the Official Journal of the European Union and become applicable on January 1, 2024. The obligations for very large online platforms and very large online search engines will apply earlier, four months after the Commission designates them as such.