brAInfood: What about fairness & AI?

Date of publication: December 2022 / Checked if updates were needed: March 2024

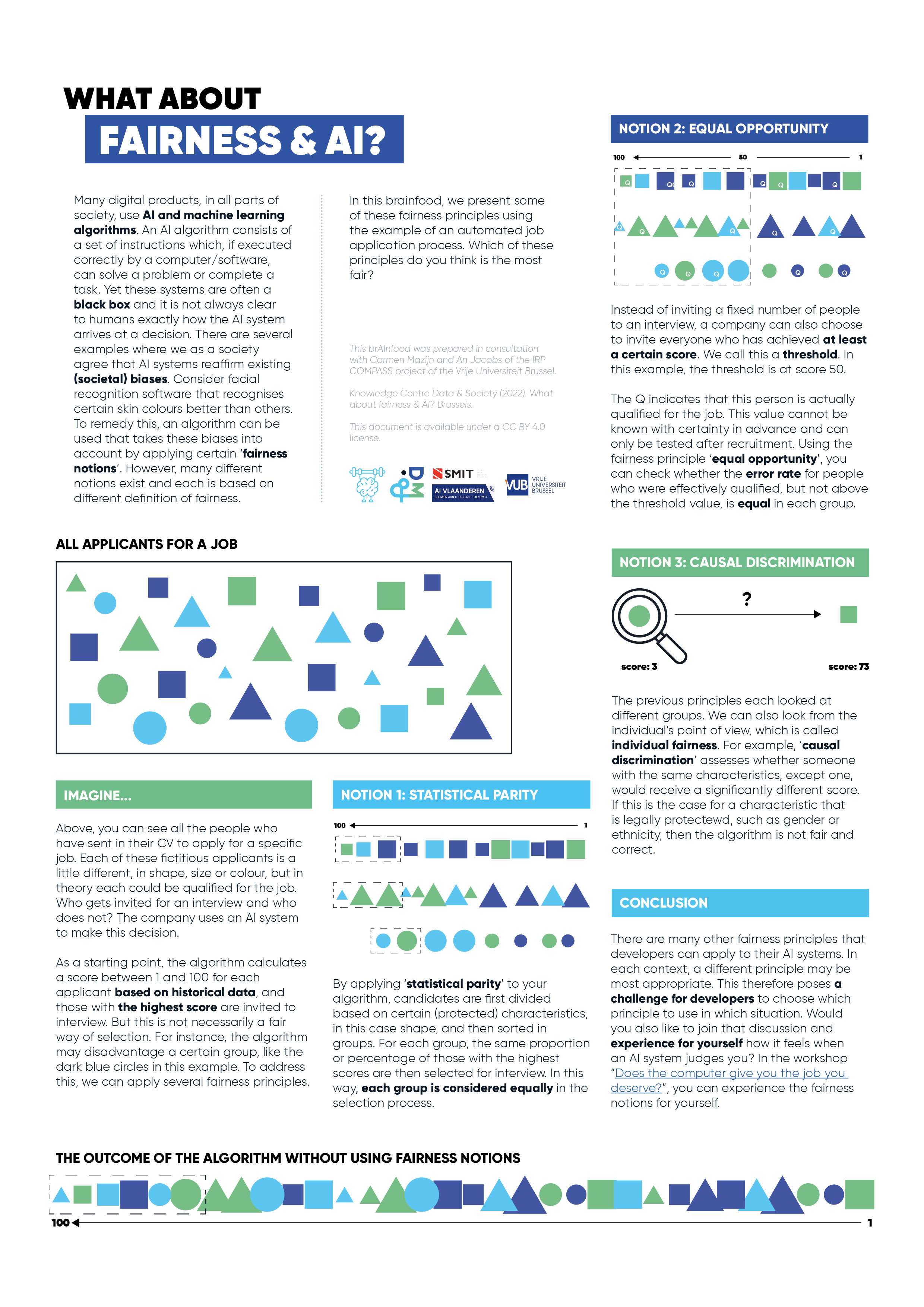

Many digital products, in all parts of society, use AI and machine learning algorithms. An AI algorithm consists of a set of instructions which, if executed correctly by a computer, can solve a problem or complete a task. Yet these systems are often a black box: it is not always clear to humans exactly how the AI system arrives at a decision. There are several examples where we as a society agree that AI systems reaffirm existing (societal) biases. Consider facial recognition software that recognises certain skin colours better than others. To remedy this, an algorithm can be used that takes these biases into account by applying certain ‘fairness notions’. However, many different notions exist, and each is based on a different definition of fairness.

In this brAInfood, we present some of these fairness principles using the example of an automated job application process. Which of these principles do you think is the fairest?

This brAInfood was prepared in consultation with Carmen Mazijn and An Jacobs of the IRP COMPASS project of the Vrije Universiteit Brussel.

Content of the brAInfood

This brAInfood contains an explanation of...

- 3 different fairness notions,

- what a threshold is,

- and the difference between group fairness and individual fairness

...using visualisations and a fictional scenario of an automated job application process.
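To make the idea of a threshold more concrete, here is a minimal Python sketch of a fictional job application process. It is an illustration only and is not taken from the brAInfood itself: the applicants, their scores, the groups "A" and "B" and the function `selection_rates` are all hypothetical. The group fairness notion shown (equal selection rates across groups, often called demographic parity) is a commonly used one and is not necessarily one of the three notions covered in the brAInfood.

```python
from collections import defaultdict

# Hypothetical applicants: (group, score given by an imagined screening model).
applicants = [
    ("A", 0.9), ("A", 0.7), ("A", 0.4), ("A", 0.2),
    ("B", 0.8), ("B", 0.5), ("B", 0.3), ("B", 0.1),
]


def selection_rates(applicants, thresholds):
    """Return, per group, the share of applicants whose score meets
    that group's threshold (i.e. who would be invited)."""
    invited = defaultdict(int)
    total = defaultdict(int)
    for group, score in applicants:
        total[group] += 1
        if score >= thresholds[group]:
            invited[group] += 1
    return {group: invited[group] / total[group] for group in total}


# The same threshold for everyone: the groups end up with different rates.
same = selection_rates(applicants, {"A": 0.6, "B": 0.6})

# Group-specific thresholds chosen so that both rates are equal.
adjusted = selection_rates(applicants, {"A": 0.6, "B": 0.5})

print(same)
print(adjusted)
```

With a single shared threshold, every applicant is judged by the same rule, but the two groups are invited at different rates. With group-specific thresholds, the rates can be made equal, but two applicants with the same score may then be treated differently depending on their group. This is one way to see the tension between group fairness and individual fairness.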

With brAInfood, the Knowledge Centre Data & Society wants to provide easily accessible information about artificial intelligence. brAInfood is available under a CC BY 4.0 license, which means that you can reuse and remix this material, provided that you credit us as the source.