Introduction

This card set has been designed to get you thinking about how you can use AI responsibly and inclusively in (health)care. The cards will help you uncover your blind spots: ethical pitfalls such as bias in the data. Identifying these early on increases the chance that AI will have a truly positive impact on the standard of care provided.

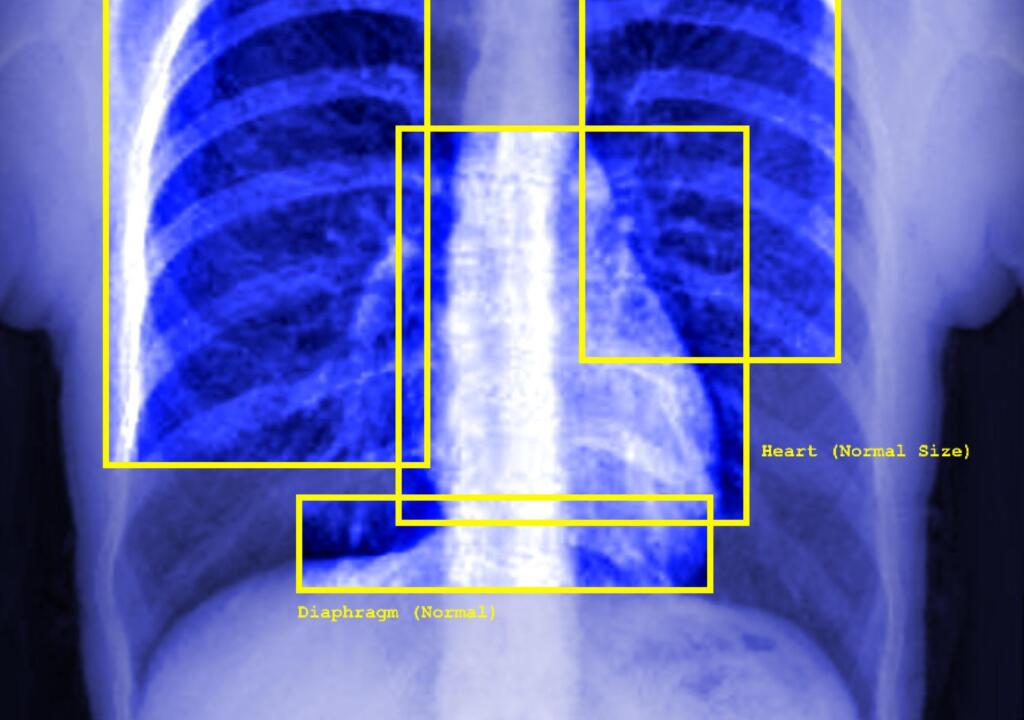

This card set focuses on AI applications that support (health)care processes, such as diagnostics. The Knowledge Centre Data & Society also has a separate card set on Generative AI (GenAI). Use that set as well if you’d like to consider the risks and challenges of GenAI.