OECD - OECD Framework for the Classification of AI systems

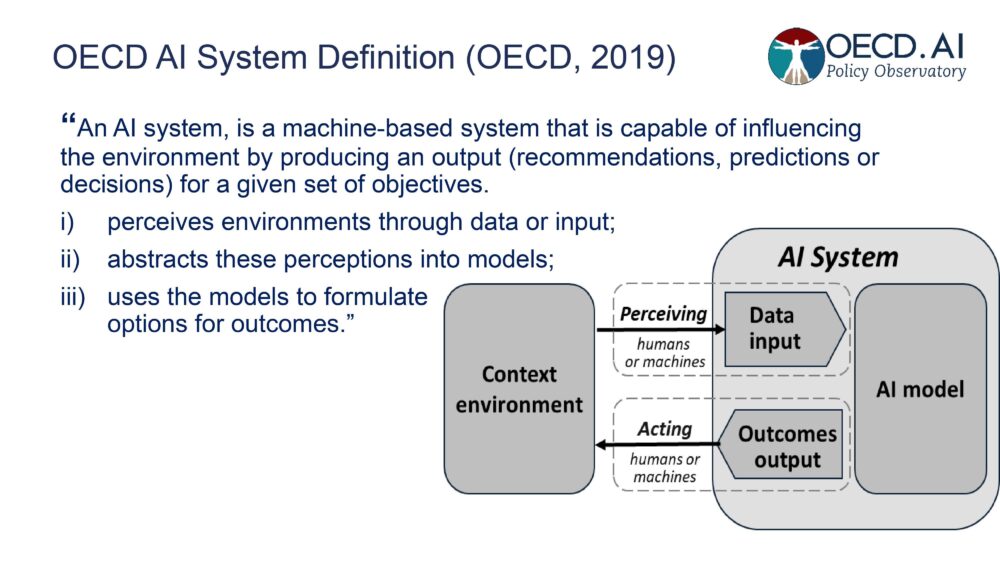

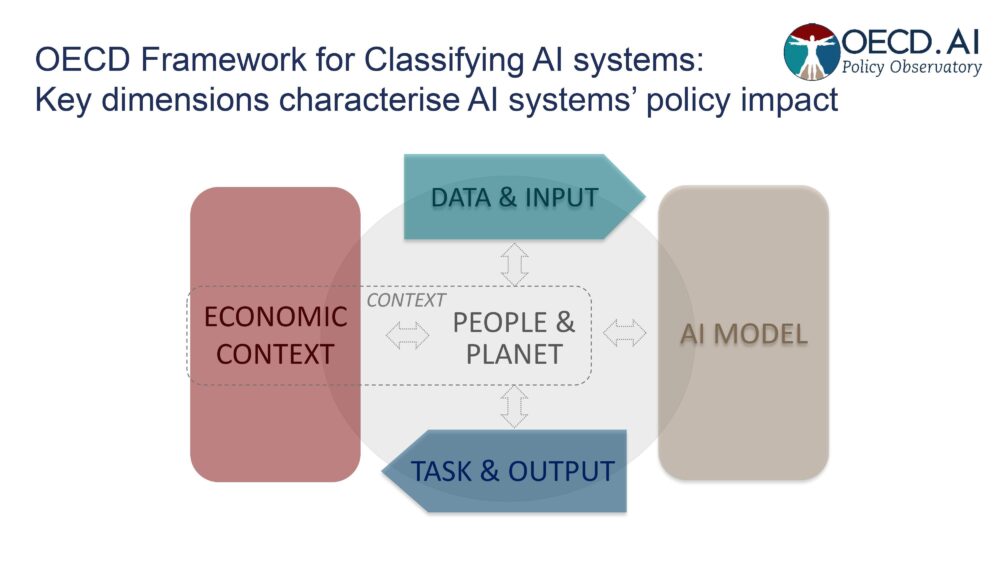

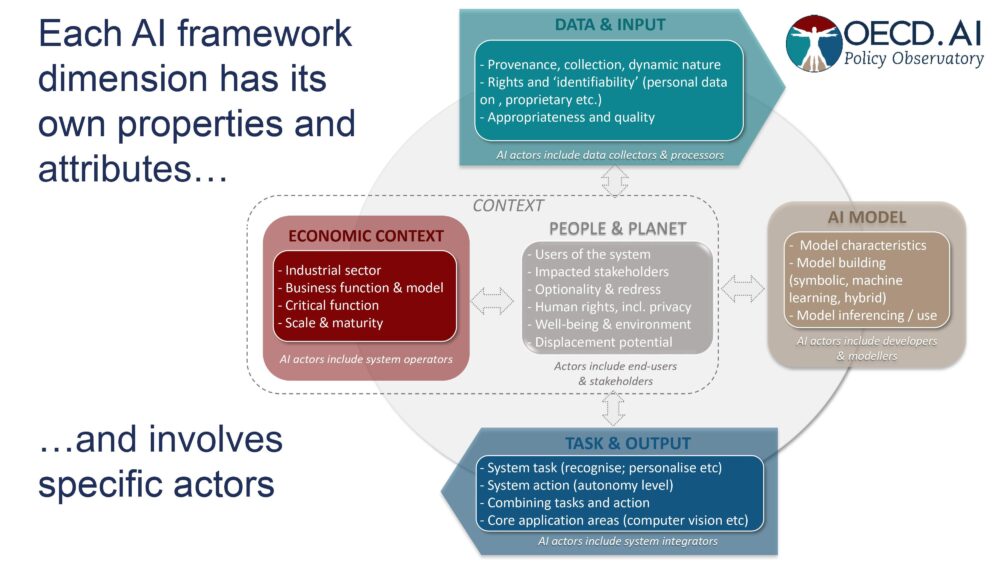

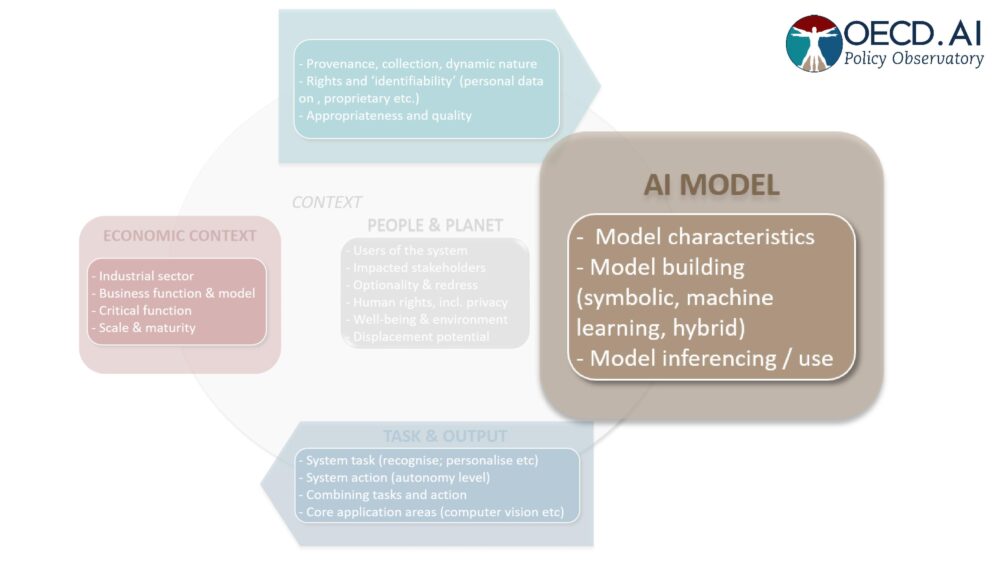

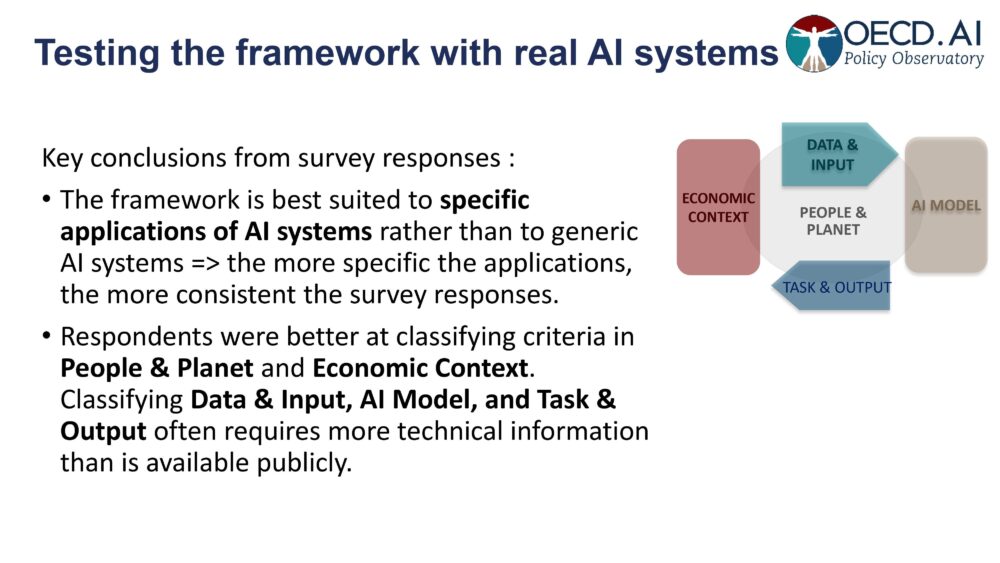

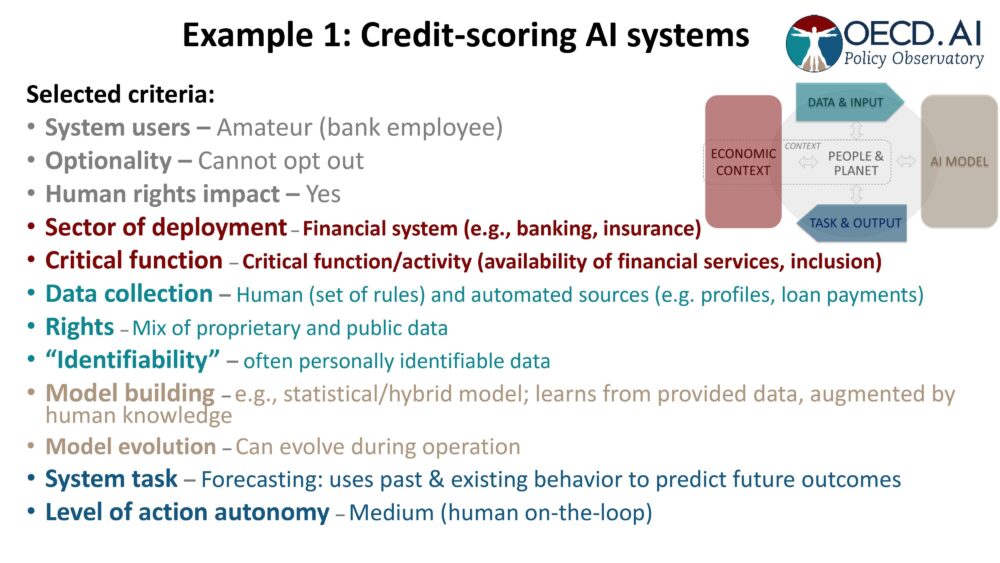

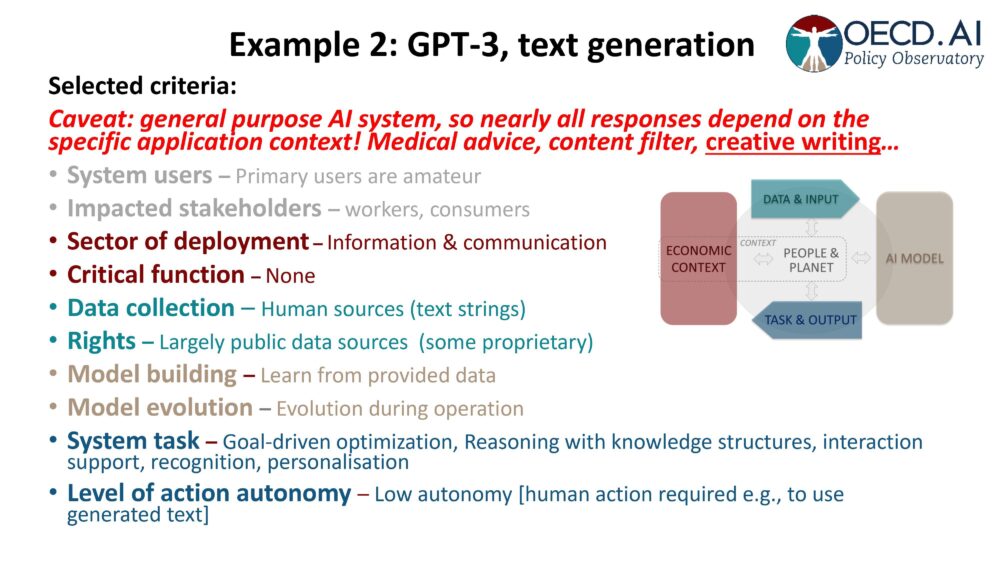

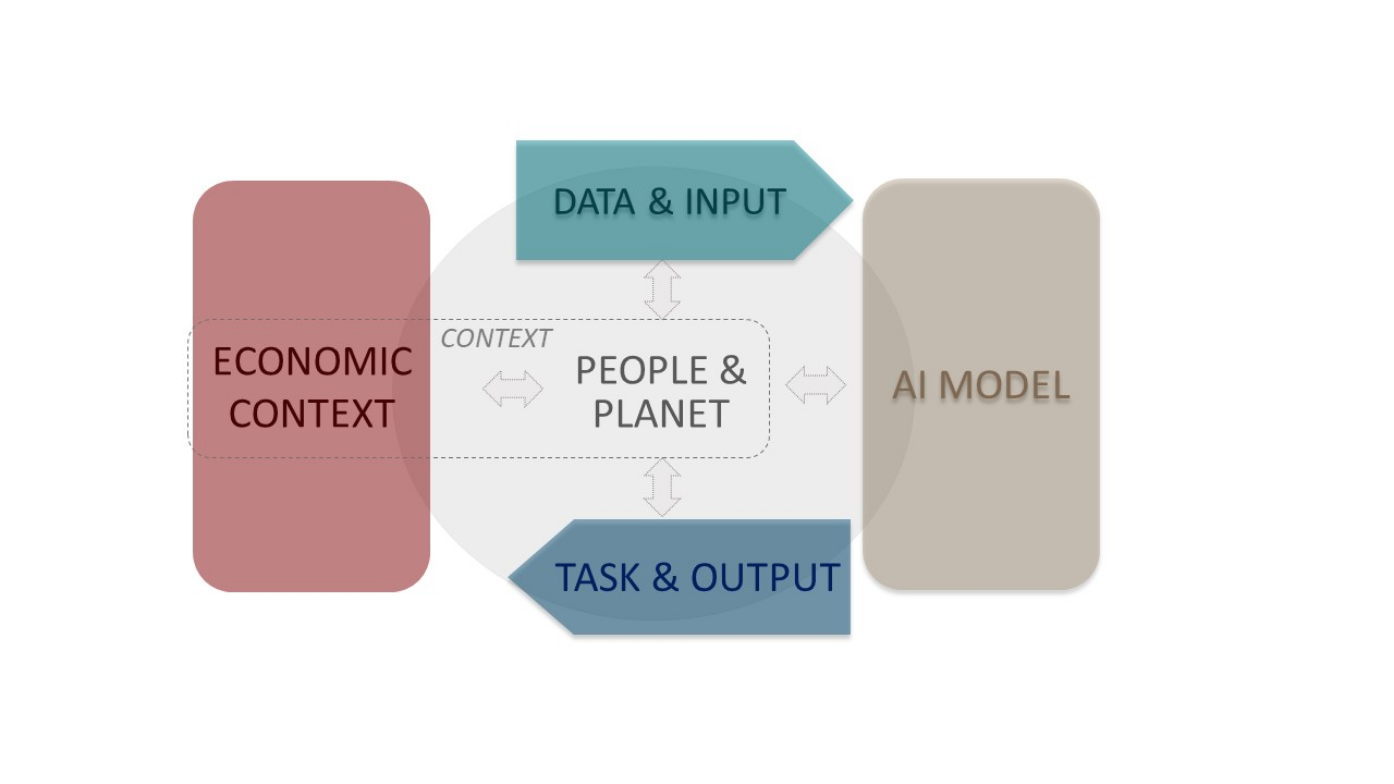

The OECD has proposed a classification framework for critically reviewing AI systems. The framework is intended to help policymakers identify specific risks associated with AI, such as bias, lack of explainability and lack of robustness. It consists of five categories and examines:

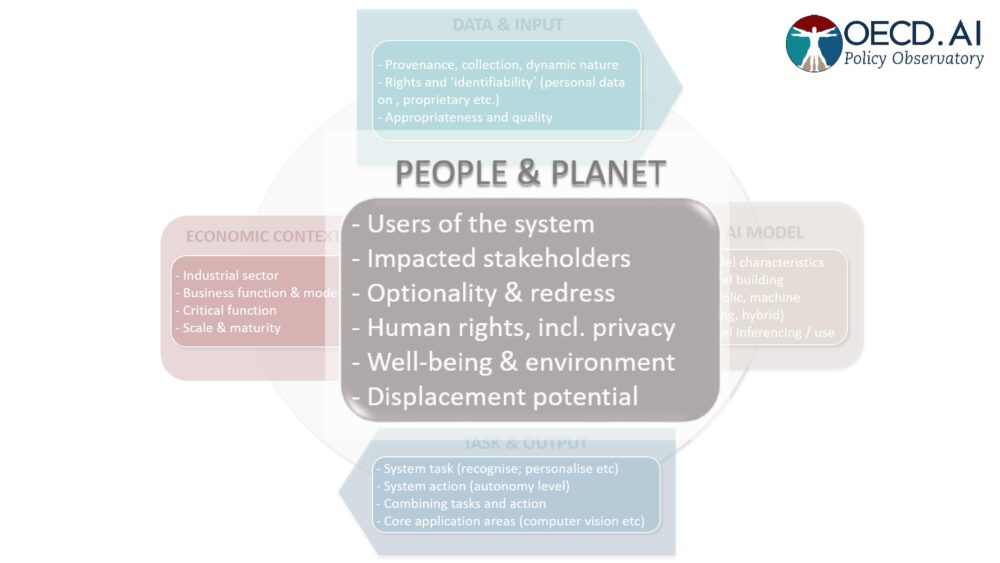

- How AI systems affect people and the planet

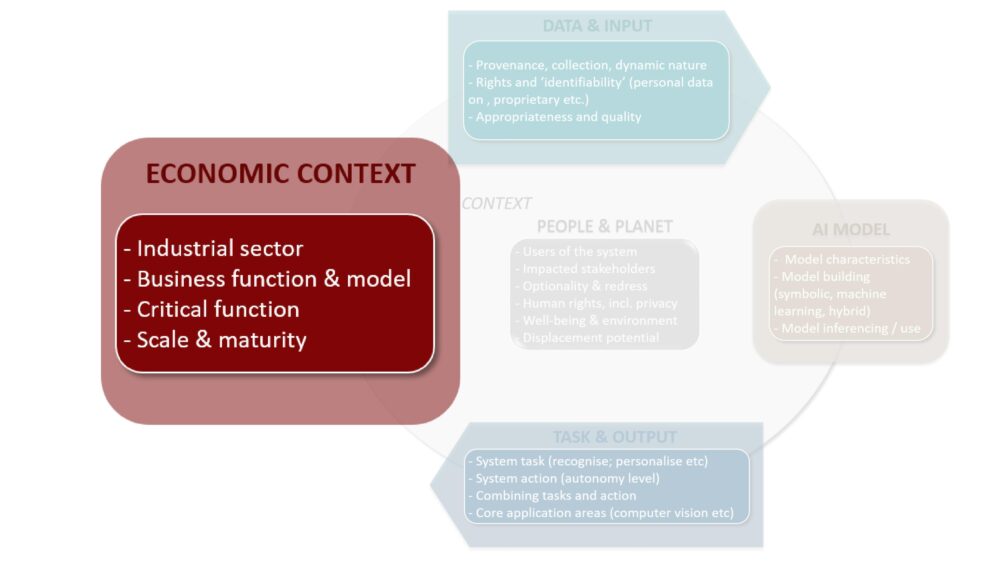

- How AI systems affect the economic context

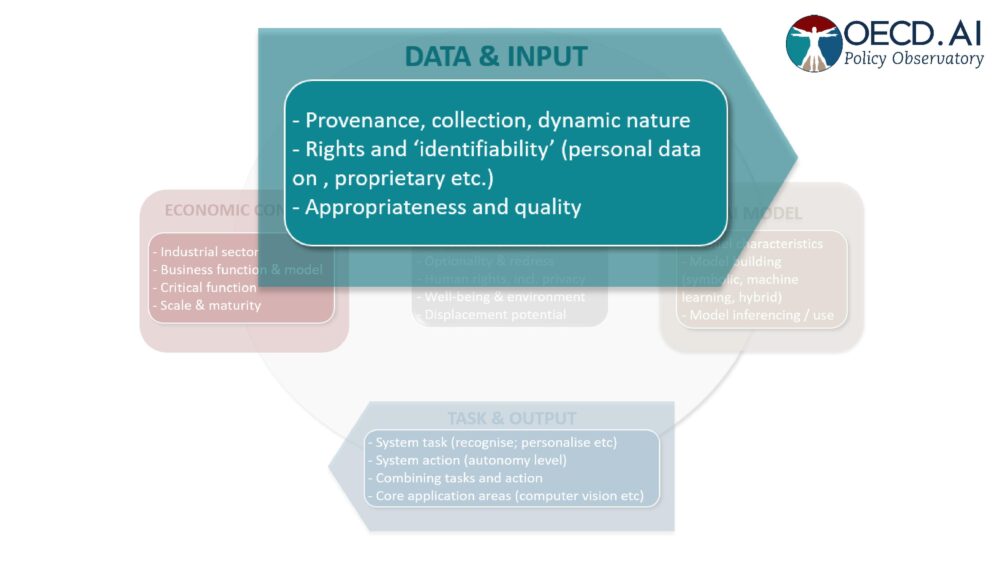

- Which data was used

- How the AI model functions

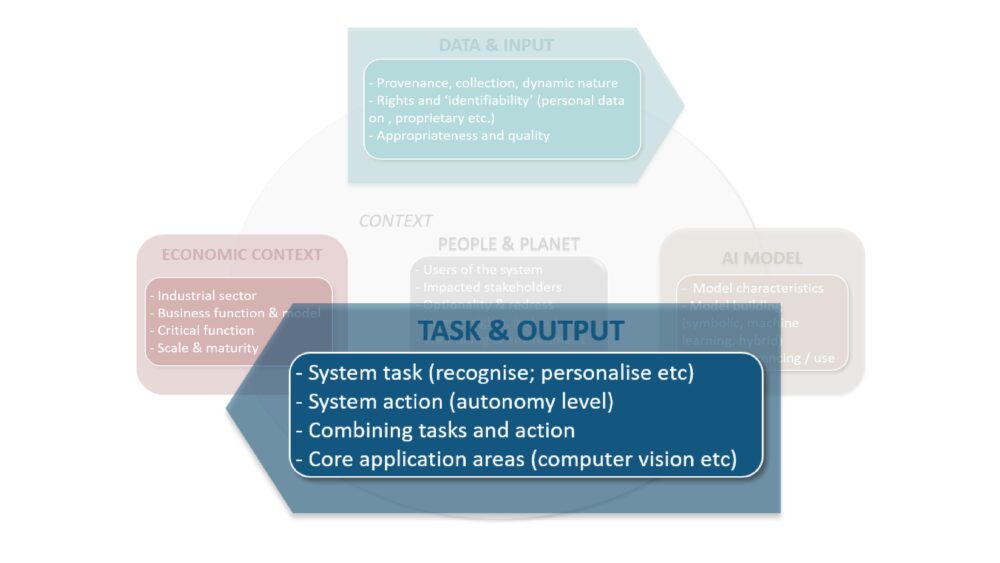

- What tasks the AI system performs

With the framework, policymakers can more easily assess what counts as risky in the context of AI.

What: Policy document

Impact score: 5

For whom: governments, policymakers

URL:

The framework is a user-friendly tool for evaluating AI systems from a policy perspective, and it helps policymakers and legislators characterise AI systems deployed in specific contexts. It is concerned not only with what AI systems are capable of, but also with where and how those capabilities are put into practice. For example, image recognition technology may be very useful for smartphone security, but in other situations its use may violate human rights.

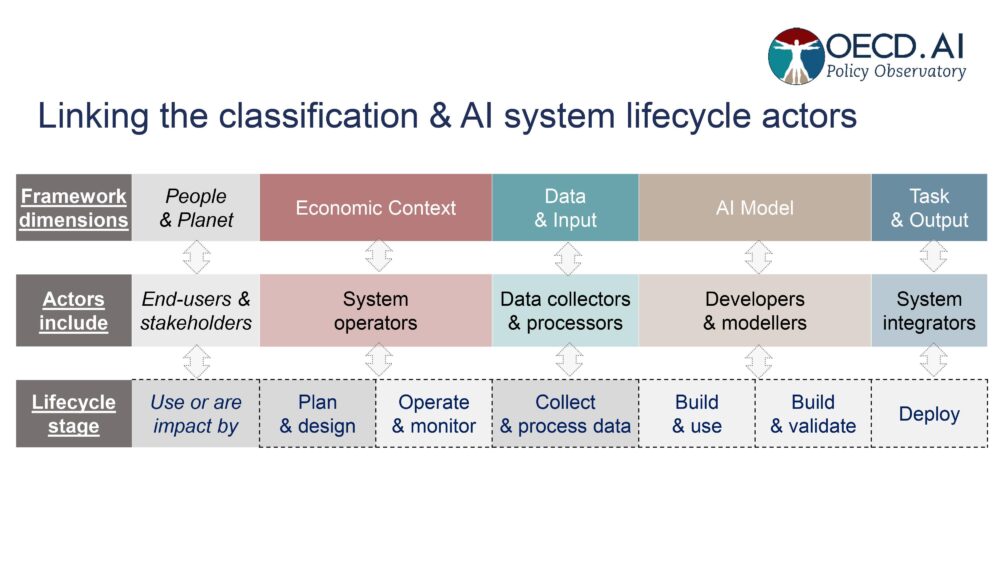

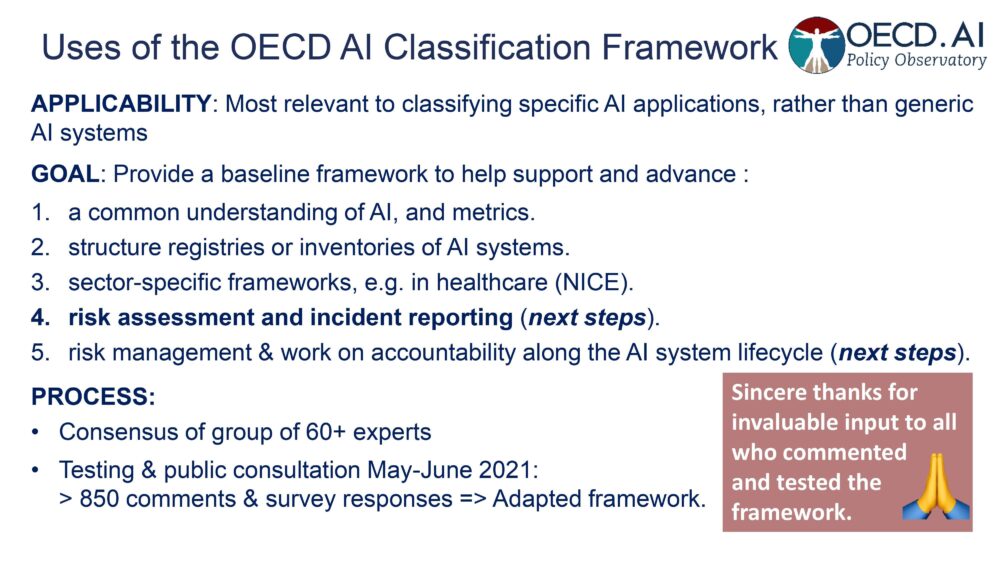

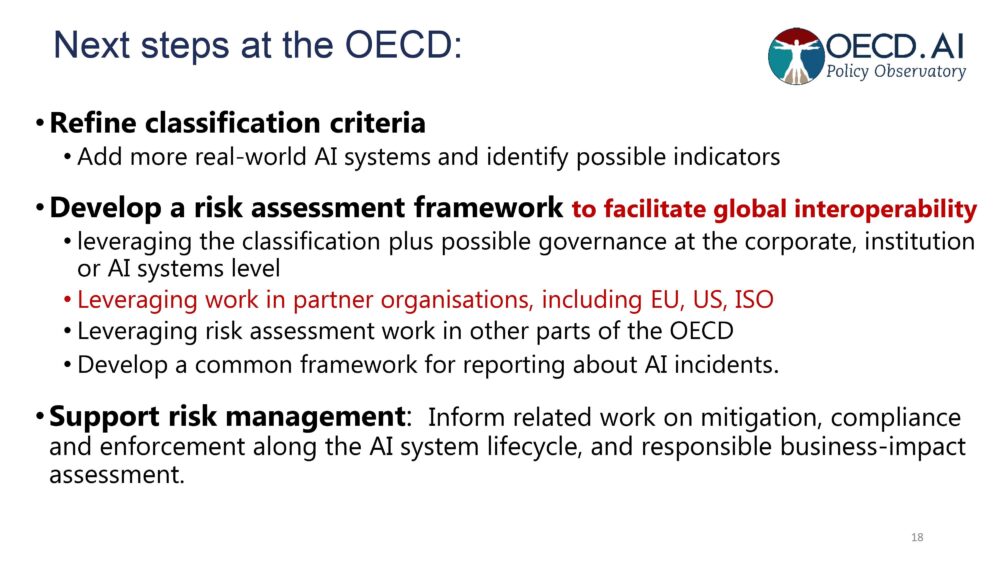

In particular, the framework provides a basis for:

- Promoting a common understanding of AI: identifying the characteristics of AI systems that matter most, helping policymakers and others tailor policies to specific AI applications, and helping to identify or develop metrics for assessing more subjective criteria (such as impact on well-being).

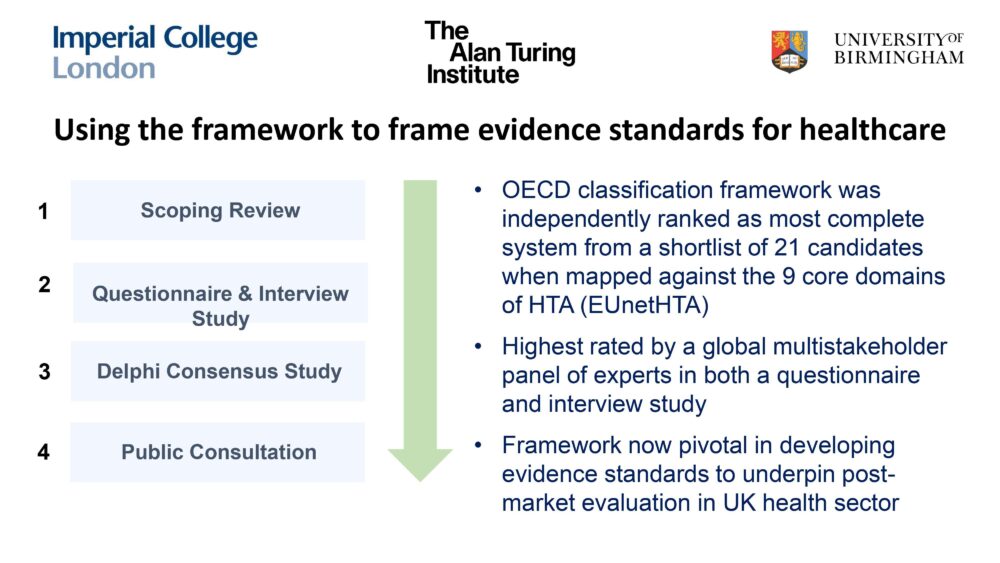

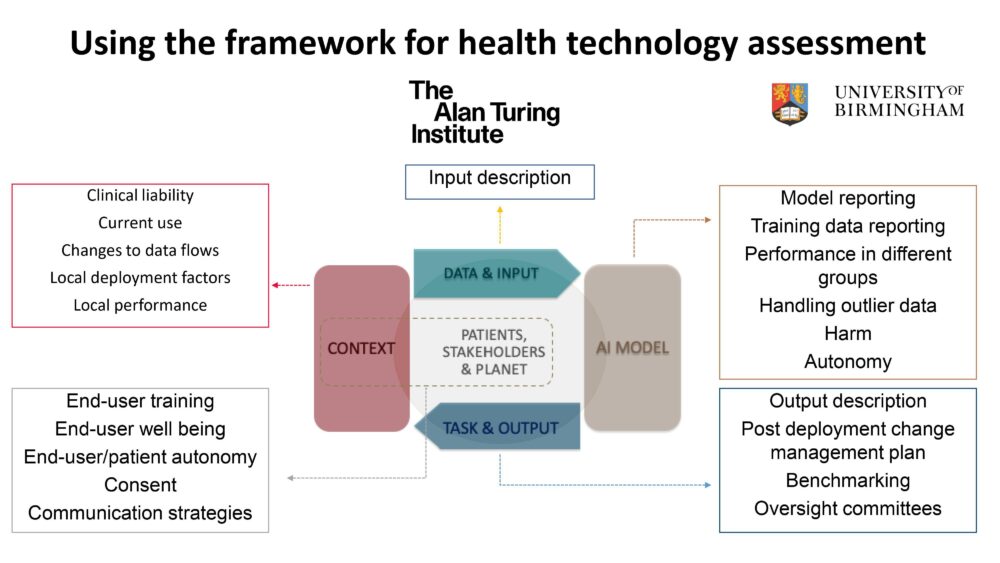

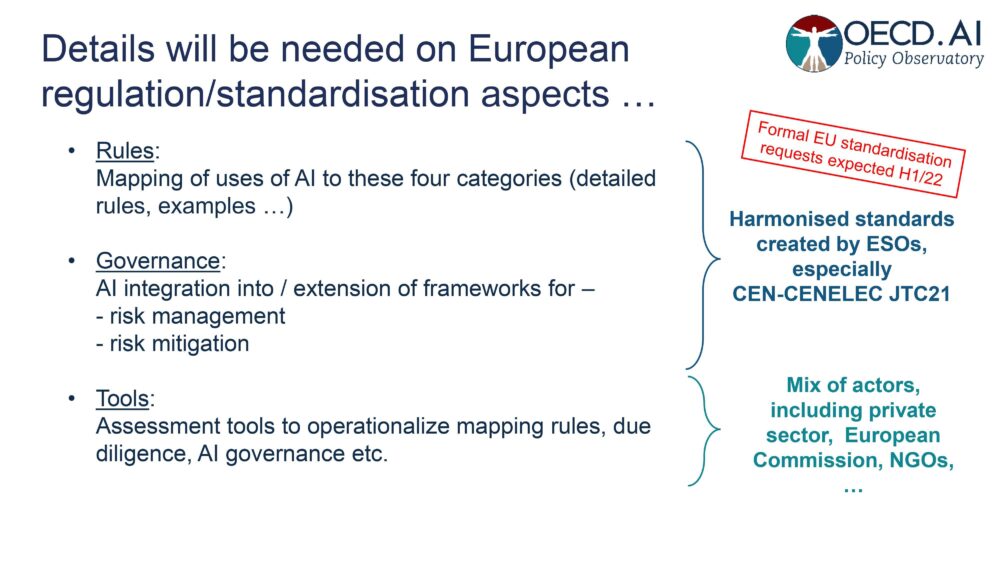

- Supporting sector-specific frameworks: providing the basis for more detailed application or domain-specific overviews of criteria, in sectors such as healthcare or finance.

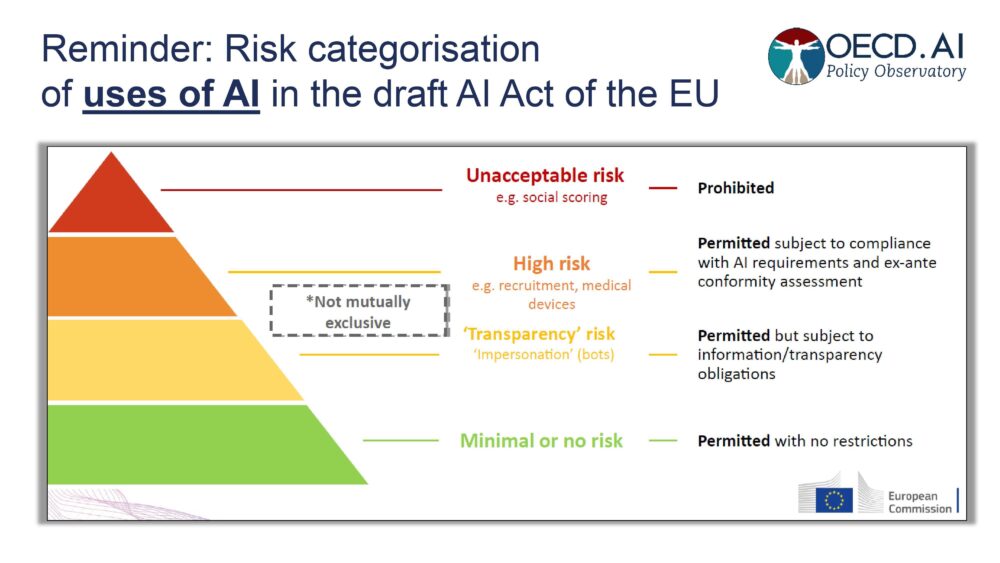

- Supporting risk assessment: providing the basis for related work to develop a risk assessment framework that helps eliminate risks and mitigate their consequences, and to develop a common framework for AI incident reporting that facilitates global consistency and interoperability.

- Supporting risk management: helping to inform risk mitigation, compliance and enforcement throughout the life cycle of AI systems, including with regard to corporate governance.

Watch the OECD presentation below: