USA - NIST AI Risk Management Framework

After several draft versions and public consultation rounds, the US National Institute of Standards and Technology (NIST) released its Artificial Intelligence Risk Management Framework (AI RMF) in January 2023. The framework aims to give stakeholders guidance on minimizing the risks and maximizing the benefits of AI systems. To this end, the document identifies several characteristics that distinguish trustworthy AI. NIST's Trustworthy and Responsible AI Resource Center will support and operationalize the AI RMF.

What: paper/study (framework document)

Impactscore: 3

For who: AI users and developers, policymakers, researchers, citizens

URL:

Summary

In the United States, the National Institute of Standards and Technology (NIST) was tasked with drafting a framework for managing the risks of AI. The first version of the framework (AI RMF 1.0) was published in January 2023. The AI RMF is a non-binding, general and living document, which gives it the flexibility to be implemented by a wide range of groups and organizations.

The framework consists of two parts. The first part discusses the material scope of the document and the characteristics of trustworthy AI, and also addresses the intended audience and the notion of risk. The second part, the Core, describes four functions (types of actions) that framework users can carry out to manage AI risks in practice: govern, map, measure and manage.

Material scope

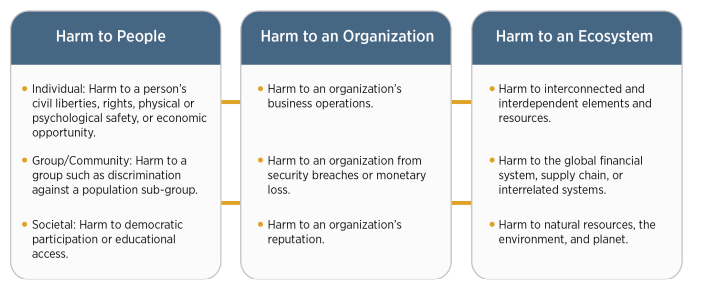

The RMF primarily frames the various types of risk that can be included in risk management. The framework begins by defining risk: “Risk refers to the composite measure of an event’s probability of occurring and the magnitude or degree of the consequences of the corresponding event.” NIST notes that this definition was deliberately kept broad, so that it is flexible enough to cover AI-related risks as they emerge. Risks can also differ depending on who or what is harmed, e.g. harm to an individual’s civil liberties or harm to natural resources in the ecosystem.

Once a risk has been identified, it is measured against various criteria, such as the stage of the AI lifecycle, the real-world setting in which the system is deployed and the human baseline against which its performance is compared. The RMF does not, however, require every risk to be eliminated: it allows for risk tolerance where the intended goal justifies it. Organizations can determine their own risk tolerance, which may depend on their legal or regulatory context (e.g. related policies and norms established by AI system owners or users, organizations, industries, communities or policy makers). Risks are then prioritized according to the potential impact of the AI system. This prioritization is highly contextual and therefore case-specific.
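To illustrate how the definition of risk, risk tolerance and prioritization fit together, here is a minimal Python sketch; it is not something the RMF itself prescribes. It assumes that probability and magnitude can each be estimated as a single number and combined by simple multiplication, whereas the RMF only speaks of a “composite measure” and leaves the method to the organization. The `Risk` class, the `prioritize` function, the example risks and the tolerance value of 0.55 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """A hypothetical AI risk entry; the fields are illustrative, not taken from the RMF."""
    name: str
    probability: float  # estimated likelihood of the event, 0.0 to 1.0
    magnitude: float    # estimated severity of the consequences, e.g. on a 1 to 5 scale

    @property
    def score(self) -> float:
        # Assumption: combine the two factors by multiplication (expected-impact style).
        # The RMF only calls risk a "composite measure" and does not fix a formula.
        return self.probability * self.magnitude


def prioritize(risks: list[Risk], tolerance: float) -> list[Risk]:
    """Keep only risks whose score exceeds the organization's tolerance, highest first."""
    above = [r for r in risks if r.score > tolerance]
    return sorted(above, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    risks = [
        Risk("biased screening of job applicants", probability=0.3, magnitude=4.0),
        Risk("chatbot gives outdated opening hours", probability=0.6, magnitude=1.0),
        Risk("training-data leak of personal data", probability=0.1, magnitude=5.0),
    ]
    # Hypothetical tolerance chosen by the organization for this use case.
    for r in prioritize(risks, tolerance=0.55):
        print(f"{r.name}: {r.score:.2f}")
```

Running the script prints the biased-screening risk (1.20) followed by the chatbot risk (0.60); the data-leak risk scores 0.50 and falls below the tolerance. This also shows a weakness of a plain product: a rare but severe harm can slip under the threshold, which is one reason an organization might weight severity more heavily or use qualitative scales instead. As the RMF stresses, both the scoring method and the tolerance remain contextual choices.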

Intended audience

The RMF emphasizes the role of its intended audience: the AI actors who will mitigate the risks. Every actor across the AI lifecycle fulfills a different role and can therefore influence the hazards that arise within their specific domain; for example, data is validated and checked during the input process, while operators check legal and regulatory requirements. In the words of the RMF, “successful risk management depends upon a sense of collective responsibility among AI actors (…).”

Characteristics of trustworthy AI

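The identified risks must then be assessed, which can be done using the criteria that NIST lays down in the RMF as “characteristics of trustworthy AI”. These include the following (the RMF itself groups several of them in pairs, such as “valid and reliable”, “secure and resilient” and “explainable and interpretable”):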

- Validation: objective confirmation that the requirements for a specific intended use or application have been fulfilled

- Reliability: ability of the AI system to perform as required, without failure, for a given time interval and under given conditions

- Accuracy: closeness of the AI system's results to the true values, or to values accepted as true

- Robustness: ability of the AI system to maintain its level of performance under a variety of circumstances

- Safety: the AI system should not, under defined conditions, endanger human life, health, property or the environment

- Security: protection against violations concerning the confidentiality, integrity or availability of the AI system

- Resilience: ability to withstand unexpected adverse events or changes and to return to normal functioning afterwards

- Transparency: providing the necessary information to people interacting with the AI system

- Accountability: responsibility for the outcomes of the AI system

- Explainability: representation of the mechanisms underlying the AI system's operation

- Interpretability: the meaning of the AI system's output in the context of its intended functional purpose

- Privacy enhancement: safeguarding privacy values such as anonymity and confidentiality

- Fairness: recognition and consideration of equity by avoiding harmful biases

RMF users are encouraged to inform NIST of the evaluations they conduct against these criteria, enabling NIST to regularly assess the effectiveness of the RMF.

Core and profiles

The second part of the RMF, the Core, revolves around four distinct functions (or types of actions) that help organizations tackle these risks in real-world scenarios. The first function is to govern: implementing an overarching AI risk management policy that facilitates the other three functions. The second function is to map, which focuses on identifying and framing risks in a case-specific setting. The third function is to measure, meaning assessing and analyzing the identified risks. Lastly, the manage function aims to prioritize, monitor and resolve the risks at hand.

NIST also describes use-case profiles, which show how the RMF can be operationalized in particular sectors or industries, such as a hiring use case or a fair housing use case.

Related developments

NIST has stated that it will continue to update the RMF to keep pace with the latest technology, feedback and standards. In this regard, it is worth noting the tension between the RMF and the upcoming EU AI Act: both aim to set standards for AI assessment on an international scale, but they take different approaches, with the EU adopting a more horizontal approach (one set of rules across sectors) and the US a more vertical, sector-specific one. Previously, the US administration argued that complying with the NIST RMF should be accepted as an alternative way of meeting the self-assessment requirements set out in the EU AI Act (https://www.euractiv.com/section/artificial-intelligence/news/eu-us-step-up-ai-cooperation-amid-policy-crunchtime/).

More recently, however, US and EU lawmakers agreed to create an AI Code of Conduct to bridge their differences, as a first step towards transnational AI governance: https://www.ced.org/pdf/CED_Policy_Brief_US-EU_AI_Code_of_Conduct_FINAL.pdf

For more information on this subject, visit this link: https://data-en-maatschappij.ai/beleidsmonitor/handels-en-technologieraad-eu-vs-ttc-ttc-joint-roadmap-for-trustworthy-ai-and-risk-management