Aequitas

The Aequitas tool performs a bias audit of your project. It is intended to analyze whether there is bias in the data and in the models you use. You can perform the audit via the desktop or online tool.

The method was developed by the Center for Data Science and Public Policy. This research center aims to stimulate the use of data science in public policy research and practice.

What you should know before reading further:

- For whom: developers, policy makers

- Process phase: problem analysis and ideation, evaluation and iteration

- System component: entire application, users, context of the AI system

- Price: freely available

Two types of audits

The first audit examines whether actions or interventions are distributed in a way that is not representative of the population. In other words, it checks whether the actions or interventions are unevenly distributed across groups. For example, choices made during data collection may make a certain population group invisible, so that another group receives more attention than is fair. An example of this can be found here.
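The representation check described above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not Aequitas's own code: the function name `representation_gap`, the `group` attribute and the toy data are all hypothetical.

```python
from collections import Counter

def representation_gap(population, selected, attribute):
    """Per group: share of interventions minus share of the population.

    A positive value means the group receives more interventions than its
    population share would suggest; a negative value means fewer.
    """
    pop_counts = Counter(person[attribute] for person in population)
    sel_counts = Counter(person[attribute] for person in selected)
    total_pop = len(population)
    total_sel = len(selected)
    gaps = {}
    for group, count in pop_counts.items():
        pop_share = count / total_pop
        sel_share = sel_counts.get(group, 0) / total_sel if total_sel else 0.0
        gaps[group] = sel_share - pop_share
    return gaps

# Hypothetical toy data: 6 people in group "a", 4 people in group "b".
population = [{"group": "a"}] * 6 + [{"group": "b"}] * 4
# The system intervened on 4 people from "a" and 1 person from "b".
selected = [{"group": "a"}] * 4 + [{"group": "b"}] * 1

gaps = representation_gap(population, selected, "group")
for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.2f}")
```

In this toy data, group "a" makes up 60% of the population but receives 80% of the interventions, while group "b" is underrepresented by the same margin.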

The second audit examines whether part of the population is treated unfairly because of incorrect assumptions made by the AI system. A concrete example: an AI system developed for assessing creditworthiness assumes that shopping at a night shop is a sign of unreliable behavior. This assumption is unfair when it turns out that the night shop is sometimes the only place where food can be bought.
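One common way to make this second kind of audit concrete is to compare error rates between groups, for example how often people who are in fact reliable are wrongly flagged. The sketch below assumes binary `score` (1 = flagged by the system) and `label_value` (1 = the flag turned out to be justified) fields; these names, the `shop` attribute and the data are assumptions for illustration, and the code does not use the Aequitas library itself.

```python
def false_positive_rates(rows, attribute):
    """Per group: share of people with label_value 0 who were flagged anyway."""
    flagged = {}
    negatives = {}
    for row in rows:
        if row["label_value"] == 0:
            group = row[attribute]
            negatives[group] = negatives.get(group, 0) + 1
            flagged[group] = flagged.get(group, 0) + row["score"]
    return {group: flagged[group] / negatives[group] for group in negatives}

def disparities(rates, reference_group):
    """Express each group's rate as a ratio to the reference group's rate."""
    reference = rates[reference_group]
    if reference == 0:
        raise ValueError("reference group has a rate of 0; ratio is undefined")
    return {group: rate / reference for group, rate in rates.items()}

# Hypothetical toy data: everyone below repaid their loan (label_value 0).
rows = (
    [{"shop": "day", "score": 1, "label_value": 0}]
    + [{"shop": "day", "score": 0, "label_value": 0}] * 3
    + [{"shop": "night", "score": 1, "label_value": 0}] * 2
    + [{"shop": "night", "score": 0, "label_value": 0}] * 2
)

rates = false_positive_rates(rows, "shop")
ratio = disparities(rates, reference_group="day")
print(rates)
print(ratio)
```

Here night-shop customers who repaid their loans are wrongly flagged twice as often as day-shop customers, which is the kind of signal this audit is meant to surface.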

Preparation

This toolkit consists of an online and an offline version. To use the online version, you need a clear description of the population and the subgroups you want to examine (for example by race or gender). You also need information about the system's results, that is, an overview of the actions the system recommends for particular people. This type of audit is often done after the fact, so it is recommended to work with results from actual use of the AI system.

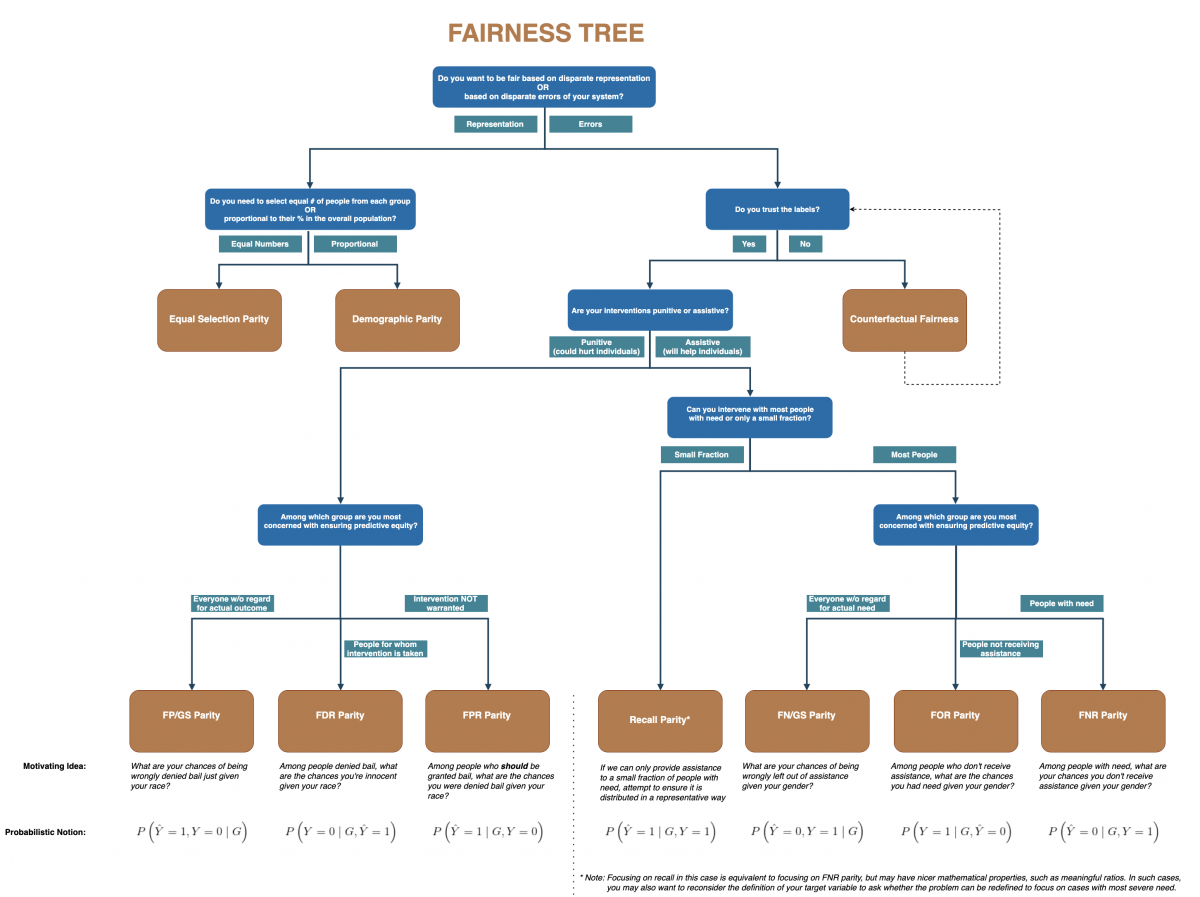

The audit can also be done offline, via the desktop version or in Python. In any case, the data must be prepared for the audit. There is also a decision tree, shown in the figure in this section, that helps you determine which type of fairness audit is suitable for your application. You can also find it here.
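As a rough idea of what preparing the data can involve, the sketch below checks a CSV file before it is handed to an audit. The column names `score` and `label_value`, the binary encoding and the `gender` attribute are assumptions made for this example; consult the tool's own documentation for the exact input format it expects.

```python
import csv
import io

# Assumed column names for the system's decision and the observed outcome.
REQUIRED = ("score", "label_value")

def validate_rows(rows, attributes):
    """Return a list of human-readable problems found in the rows."""
    problems = []
    for index, row in enumerate(rows):
        for column in REQUIRED:
            if row.get(column) not in ("0", "1"):
                problems.append(f"row {index}: {column} must be 0 or 1")
        for attribute in attributes:
            if not row.get(attribute):
                problems.append(f"row {index}: missing {attribute}")
    return problems

# Hypothetical sample file; the third data row lacks a label_value.
sample = io.StringIO(
    "score,label_value,gender\n"
    "1,0,female\n"
    "0,0,male\n"
    "1,,female\n"
)
rows = list(csv.DictReader(sample))
problems = validate_rows(rows, ["gender"])
for problem in problems:
    print(problem)
```

Running a check like this first makes it easier to tell a genuine fairness finding apart from a gap in the data.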

Method

The system consists of decision trees that help determine which kind of fairness you want to achieve; an example is displayed above. There is also a toolkit that can produce an audit report and offers several digital aids, such as code that you can add to your own Python work, to help you assess fairness in your data during programming.

Result

You can either monitor the degree of equality in the data during development of the application, or have an audit report drawn up afterwards, based on the data, via the online or desktop tool.
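To give a feel for what such a report might contain, the sketch below turns per-group disparity ratios into short verdict lines. The function `audit_report`, the input values and the threshold `tau=0.8` are assumptions for illustration (the threshold is borrowed from the widely used four-fifths rule); the actual report produced by the tool may look different.

```python
def audit_report(disparity_by_group, tau=0.8):
    """Label each group's disparity as inside or outside [tau, 1 / tau]."""
    lines = []
    for group, disparity in sorted(disparity_by_group.items()):
        within = tau <= disparity <= 1 / tau
        verdict = "within range" if within else "outside range"
        lines.append(f"{group}: disparity {disparity:.2f} ({verdict})")
    return lines

# Hypothetical disparity ratios relative to reference group "a".
report = audit_report({"a": 1.0, "b": 2.0, "c": 0.9})
for line in report:
    print(line)
```

With a threshold of 0.8, ratios between 0.8 and 1.25 count as acceptable, so only group "b" is reported as outside the range.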

| Values as mentioned in the tool | Related ALTAI-principles |
|---|---|

Link

This tool was not developed by the Knowledge Center Data & Society. We describe the tool on our website because it can help you deal with ethical, legal or social aspects of AI applications. The Knowledge Center is not responsible for the quality of the tool.