Workshop: Fairness notions in action

Does the computer give you the job you deserve?

In this workshop you experience the different ways in which an algorithm can make a selection fairly (or unfairly).

Machine learning algorithms are used for many different purposes in society. Still, these systems are often a black box: it is not clear how their decisions are made. There are plenty of examples of AI systems that confirm and reinforce existing biases in society. Think of face recognition software that recognises people with some skin colours better than others.

To counter these biases, computer scientists can design an algorithm that takes them into account by applying different kinds of 'fairness principles'. However, there is no clear consensus on what these principles should be, and everyone has a different opinion on what is most fair. In this workshop you experience for yourself how an AI algorithm can select people in a fair way. The different principles are explained, tried out and discussed during the workshop. What do you think is the most fair?

The workshop is based on the fairness notions as described by Makhlouf et al. (2021). Do you want to read more about them? You can find the article here.

Practical details

Type: physical workshop

Time: 1.5 hours

Age: 16-99 years old

The fairness notions

Here you can read more about the different fairness notions we will discuss during the workshop.

Input data

These are the people who have sent in their CV to apply for the job.

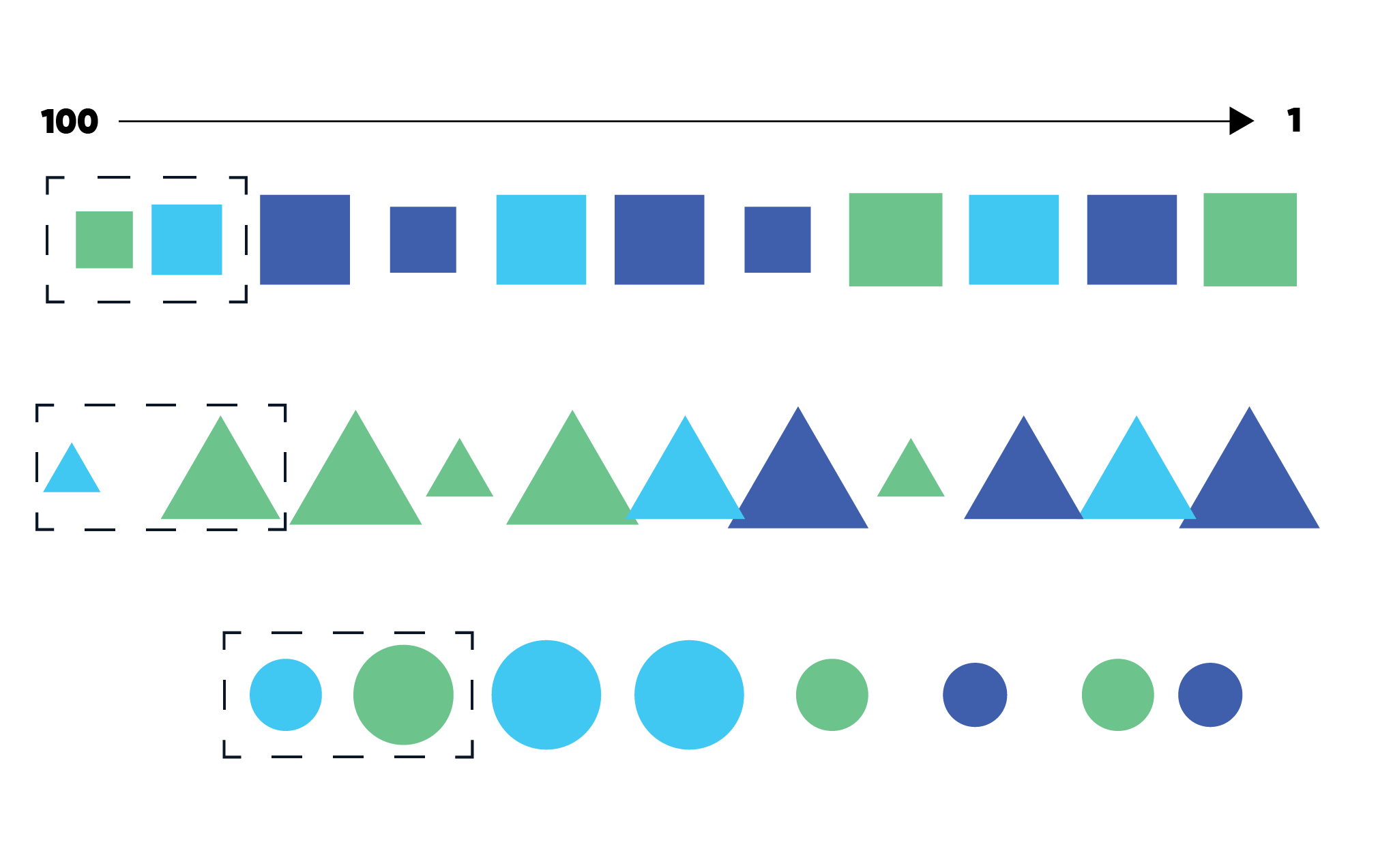

The algorithm

The algorithm calculates a score between 1 and 100 for each candidate, based on historical data. The people with the highest scores are invited for a job interview.
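As a rough illustration of this selection step, the sketch below invites the candidates with the highest scores. The candidate names, the scores and the function name `invite_top` are made up for this example; the workshop does not prescribe how the scores themselves are computed.

```python
# Hypothetical candidates as (name, score) pairs; all values are invented.
candidates = [
    ("A", 72), ("B", 35), ("C", 88), ("D", 51),
    ("E", 64), ("F", 20), ("G", 93), ("H", 47),
]

def invite_top(candidates, k):
    """Return the k candidates with the highest scores, best first."""
    return sorted(candidates, key=lambda c: c[1], reverse=True)[:k]

invited = invite_top(candidates, 3)
for name, score in invited:
    print(name, score)
```

Note that this plain ranking looks only at the score. It does not check who ends up in the selection, which is where the fairness notions come in.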

Statistical parity

With statistical parity, the candidates are first grouped by a certain (protected) characteristic, such as shape. You then invite the same number of people from each group for a job interview, so that none of the groups is disadvantaged. More generally, statistical parity asks that the same share of each group is invited.

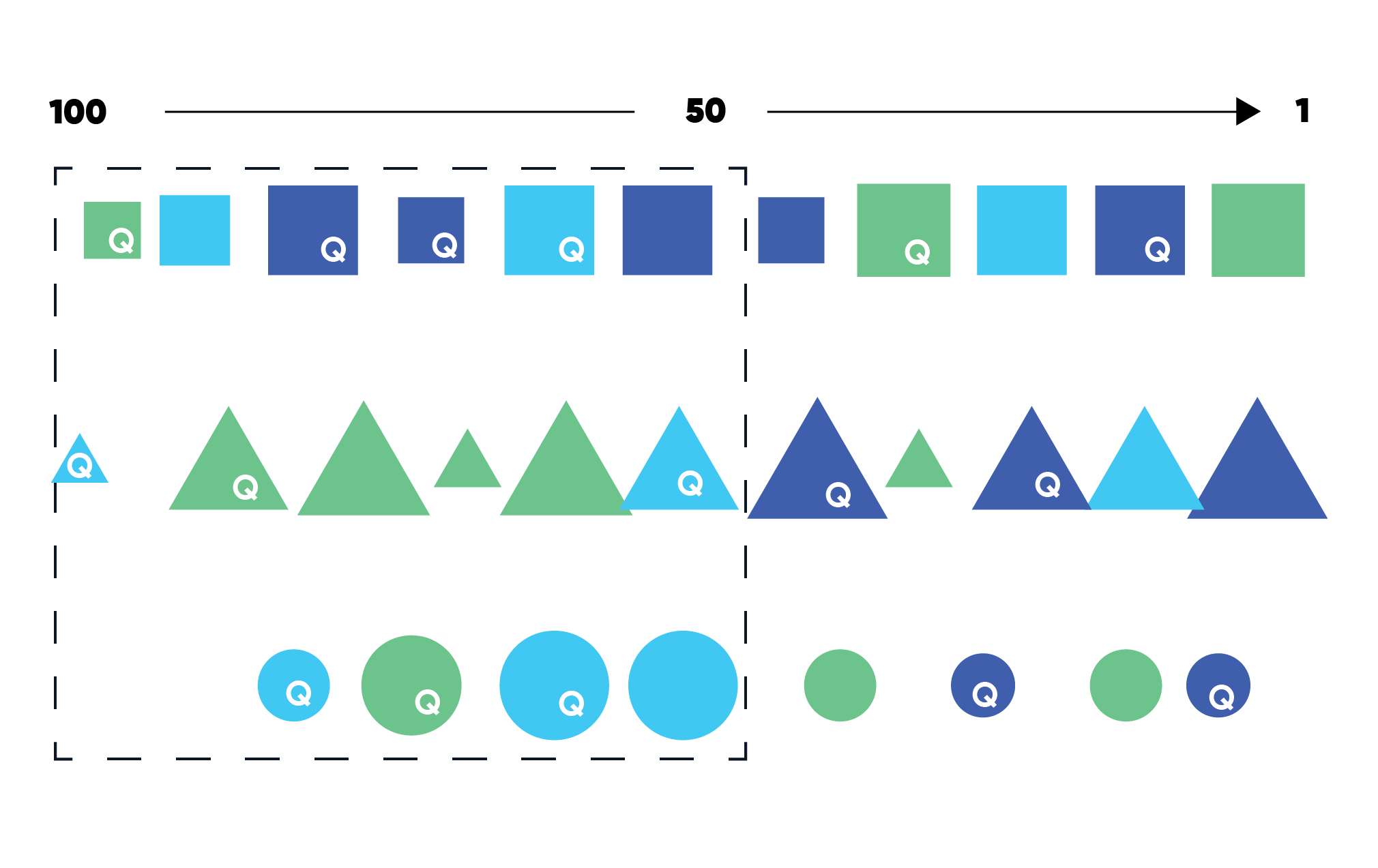
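The idea of statistical parity can be sketched in a few lines of code. The example below is only an illustration under assumed data: the applicants, their shapes and scores, and the function names `invite_per_group` and `selection_rates` are all hypothetical. It compares a plain "highest scores first" selection with a selection that invites the same number of people from each group.

```python
from collections import defaultdict

# Hypothetical applicants as (name, shape, score); all values are invented.
applicants = [
    ("A", "circle", 90), ("B", "circle", 80), ("C", "circle", 70), ("D", "circle", 30),
    ("E", "square", 60), ("F", "square", 55), ("G", "square", 35), ("H", "square", 10),
]

def invite_per_group(applicants, per_group):
    """Invite the highest-scoring `per_group` people from every shape group."""
    by_group = defaultdict(list)
    for person in applicants:
        by_group[person[1]].append(person)
    invited = []
    for members in by_group.values():
        members.sort(key=lambda p: p[2], reverse=True)
        invited.extend(members[:per_group])
    return invited

def selection_rates(applicants, invited):
    """Share of each group that was invited."""
    total, chosen = defaultdict(int), defaultdict(int)
    for _, shape, _ in applicants:
        total[shape] += 1
    for _, shape, _ in invited:
        chosen[shape] += 1
    return {shape: chosen[shape] / total[shape] for shape in total}

# Plain ranking: the four highest scores overall.
plain = sorted(applicants, key=lambda p: p[2], reverse=True)[:4]
plain_rates = selection_rates(applicants, plain)

# Statistical parity: two invitations per group.
parity = invite_per_group(applicants, per_group=2)
parity_rates = selection_rates(applicants, parity)

print("plain ranking:", plain_rates)
print("per group:    ", parity_rates)
```

With this invented data the plain ranking invites three circles and one square, while the per-group selection invites half of each group.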

Equal opportunity

Instead of inviting six people to interviews, a company can also choose to invite everyone who has achieved a certain score or more. We call that a threshold value. In this example, the threshold is at score 50.

The Q indicates that this person is actually qualified for the job. With the fairness principle equal opportunity, you check that the share of qualified people who score below the threshold, and are therefore wrongly not invited, is similar in each group.

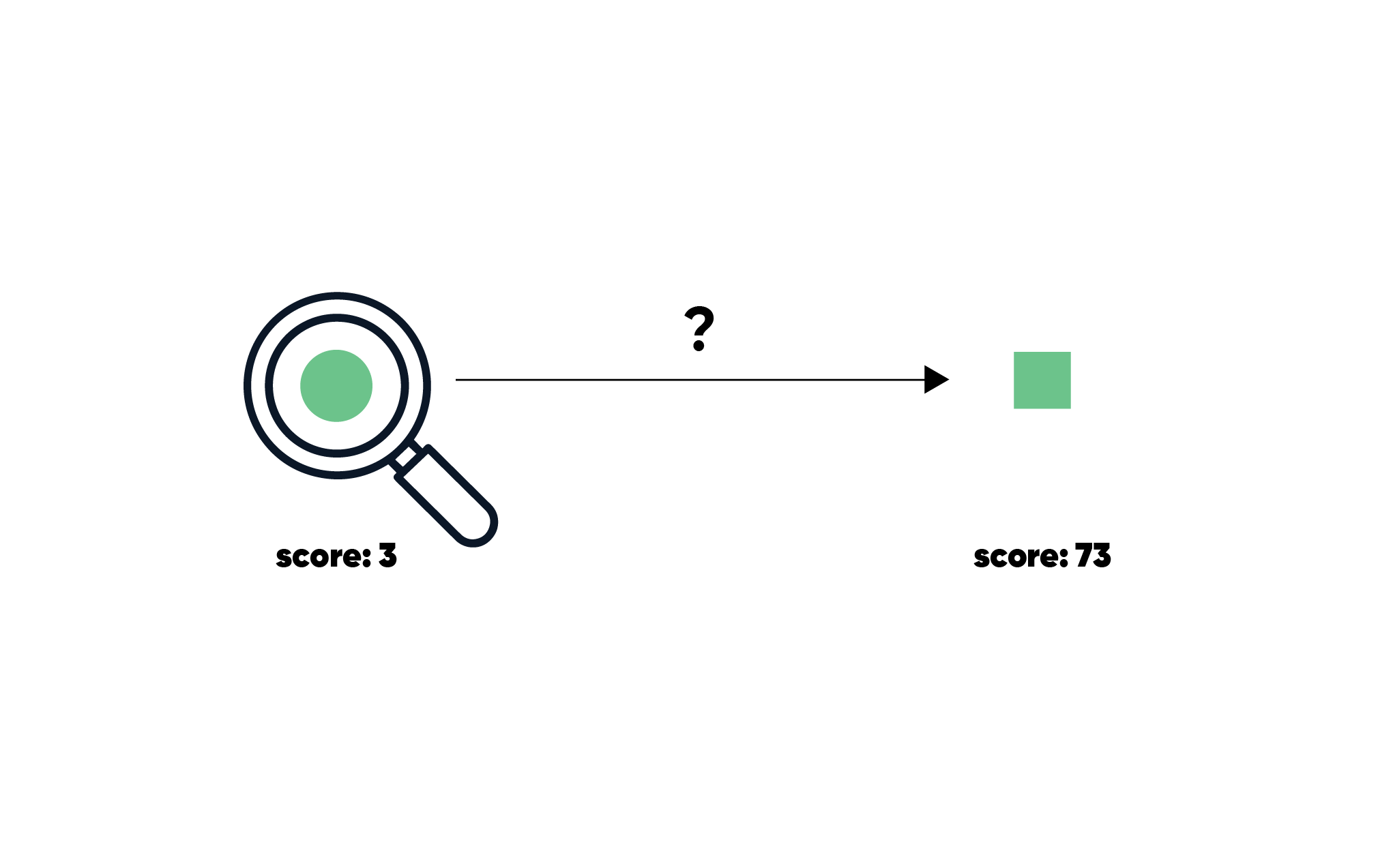
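A check for equal opportunity could look roughly like the sketch below. It uses the threshold of 50 from the example; the applicants, their scores, the qualification flags (the Q in the picture) and the function name `miss_rates` are assumptions made for illustration only.

```python
from collections import defaultdict

THRESHOLD = 50  # threshold value used in the example above

# Hypothetical applicants as (shape, score, qualified); all values are invented.
applicants = [
    ("circle", 80, True), ("circle", 60, True), ("circle", 45, True), ("circle", 30, False),
    ("square", 70, True), ("square", 40, True), ("square", 35, True), ("square", 55, False),
]

def miss_rates(applicants, threshold):
    """Per group: share of qualified people who score below the threshold."""
    qualified, missed = defaultdict(int), defaultdict(int)
    for shape, score, is_qualified in applicants:
        if is_qualified:
            qualified[shape] += 1
            if score < threshold:
                missed[shape] += 1
    return {shape: missed[shape] / qualified[shape] for shape in qualified}

rates = miss_rates(applicants, THRESHOLD)
for shape, rate in rates.items():
    print(f"{shape}: {rate:.0%} of qualified applicants not invited")
```

In this invented data one of three qualified circles is missed, against two of three qualified squares, so equal opportunity would not be satisfied.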

Causal discrimination

The previous principles each compared groups. We can also look at individuals; this is called individual fairness. With causal discrimination we check, for example, whether two people who have exactly the same characteristics, apart from a protected one, receive a different score. If the score changes only because of a legally protected characteristic, such as gender or ethnicity, then your algorithm is not fair.
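One way to picture this check is to copy a person, change only the protected characteristic, and see whether the score changes. The sketch below does this for two toy scoring functions. Everything in it is hypothetical: the characteristics, the scoring rules and the names `score_ignoring_gender`, `score_using_gender` and `changes_with_protected` are invented for illustration and do not describe a real hiring algorithm.

```python
def score_ignoring_gender(person):
    """Toy scoring rule that looks only at experience and degree."""
    return min(100, 10 * person["years_experience"] + 20 * person["has_degree"])

def score_using_gender(person):
    """Toy scoring rule that (unfairly) adds points based on gender."""
    bonus = 15 if person["gender"] == "male" else 0
    return min(100, score_ignoring_gender(person) + bonus)

def changes_with_protected(score_fn, person, protected_key, alternatives):
    """True if changing only the protected characteristic changes the score."""
    base = score_fn(person)
    for value in alternatives:
        twin = {**person, protected_key: value}  # identical except for one field
        if score_fn(twin) != base:
            return True
    return False

person = {"years_experience": 4, "has_degree": True, "gender": "female"}
genders = ["female", "male", "non-binary"]

fair_result = changes_with_protected(score_ignoring_gender, person, "gender", genders)
unfair_result = changes_with_protected(score_using_gender, person, "gender", genders)

print("rule ignoring gender changes score:", fair_result)
print("rule using gender changes score:   ", unfair_result)
```

A real check would repeat this for many people and for every protected characteristic, since a single unchanged score does not show that the algorithm is fair.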
